Android Application Architecture [Translation]

Preface

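This article describes how its author, over several years of Android development, went through two architectural changes: the first brought in RxJava and the second brought in MVP. Combining RxJava with MVP produced an architecture with low coupling, simple code, and easy testing.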

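We have run into the same thing in our own work. Android has a low barrier to entry. If the app is not planned clearly up front, the coders misjudge how it will change, and the team lacks architectural experience, you end up with an Activity that is several thousand lines long and stuffed with private methods. Splitting it into a few Fragments or extracting a few classes does not fix it. The Activity becomes painful to read, you can neither comfortably change it nor leave it alone, and it ruins the mood of whoever has to look at it. Then everyone blames the product people for planning the app badly, lacking foresight, and changing things back and forth.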

This article uses a new structure to solve that problem.


Android Application Architecture

Our journey from standard Activities and AsyncTasks to a modern MVP-based architecture powered by RxJava.

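This article mainly describes how to move from the traditional Activities and AsyncTasks pattern to today's mainstream MVP-based architecture built on reactive programming.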

Different parts of a software codebase should be independent, yet perfectly work together like a well-oiled machine — photo by Chester Alvarez.

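Picture it first: a loosely coupled architecture where every part has a clear job and everything still works together perfectly would be a pretty awesome thing.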

(Even a reposted picture gets a photo credit. That is some serious copyright awareness.)

The Android dev ecosystem moves very quickly. Every week new tools are created, libraries are updated, blog posts are written and talks are given. If you go on holiday for a month, by the time you come back there will be a new version of the support library and/or Play Services.

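Over the past few years the Android ecosystem has changed very quickly, from the underlying Android APIs up to the open source libraries and tools at the application layer. Look away for a moment and you are behind.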

I’ve been making Android apps with the ribot team for over three years. During this time, the architecture and technologies we’ve used to build Android apps have been continuously evolving. This article will take you through this journey by explaining our learnings, mistakes and the reasoning behind these architectural changes.

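I have been building Android apps on the ribot team for more than three years. As the company's technology kept evolving, we gathered a lot of experience, made mistakes, and collected the reasoning behind our technology choices.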

The old application architecture

The old times

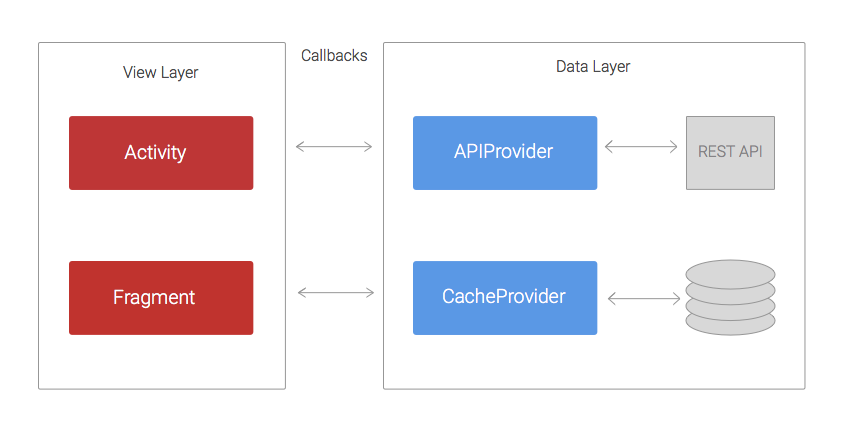

Back in 2012 our codebases used to follow a basic structure. We didn’t use any networking library and AsyncTasks were still our friends. The diagram below shows approximately how the architecture was.

Back in 2012 our code was written against the plain Android framework. We did not use any networking library and built everything on AsyncTasks.

The code was structured in two layers: the data layer that was in charge of retrieving/saving data from REST APIs and persistent data stores; and the view layer, whose responsibility was handling and displaying the data on the UI.

The APIProvider provides methods to enable Activities and Fragments to easily interact with the REST API. These methods use URLConnection and AsyncTasks to perform network calls in a separate thread and return the result to the Activities via callbacks.

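The code is split into two layers, Data and View. The Data layer fetches data from the REST API and reads and writes the persistent stores. The View layer displays that data on the UI. The APIProvider exposes methods so that Activities and Fragments can interact with the REST API easily. Technically, it uses URLConnection and AsyncTasks to run the network request on a separate thread and hands the result back through callbacks.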

In a similar way, the CacheProvider contains methods that retrieve and store data from SharedPreferences or a SQLite database. It also uses callbacks to pass the result back to the Activities.

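In the same way, the CacheProvider offers a set of methods that read and store data in SharedPreferences or SQLite, and it also returns results to the Activity through callbacks.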


The problems

The main issue with this approach was that the View layer had too many responsibilities. Imagine a simple common scenario where the application has to load a list of blog posts, cache them in a SQLite database and finally display them on a ListView. The Activity would have to do the following:

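The main problem is that the View layer carries too much. Take a blog list as an example: the app has to load the posts, cache them in SQLite, and show them on a ListView, so the Activity has to do the following: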

- Call a method loadPosts(callback) in the APIProvider

- Wait for the APIProvider success callback and then call savePosts(callback) in the CacheProvider.

- Wait for the CacheProvider success callback and then display the posts on the ListView.

- Separately handle the two potential errors callback from the APIProvider and CacheProvider.

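- Call loadPosts in the APIProvider, passing in a callback.

- Once loadPosts succeeds, call savePosts in the CacheProvider from inside that callback. savePosts takes a callback too.

- Once savePosts succeeds, refresh the ListView from inside its callback.

- Write separate code to handle the error callbacks of the APIProvider and the CacheProvider.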

This is a very simple example. In a real case scenario the REST API will probably not return the data like the view needs it. Therefore, the Activity will have to somehow transform or filter the data before showing it. Another common case is when the loadPosts() method takes a parameter that needs to be fetched from somewhere else, for example an email address provided by the Play Services SDK. It’s likely that the SDK will return the email asynchronously using a callback, meaning that we now have three levels of nested callbacks. If we keep adding complexity, this approach will result into what is known as callback hell.

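This is still a fairly simple example. In real scenarios the remote API may not return the data in the shape the view needs, so the Activity has to transform or filter it before display. Another case is when loadPosts needs a parameter that comes from somewhere else, such as an email address that the Play Services SDK returns asynchronously through a callback. That makes three levels of nested callbacks, and with any more complexity, untangling them becomes a real pain.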
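The three levels of nesting described above can be sketched in plain Java. This is only an illustration under assumptions: the `Callback` interface, `AccountSdk.getEmail`, the class bodies, and the synchronous in-memory behaviour are all hypothetical. The article itself only names `loadPosts(callback)` and `savePosts(callback)`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical callback type; the article does not show the real one.
interface Callback<T> {
    void onSuccess(T result);
    void onError(Exception e);
}

// Hypothetical stand-in for an SDK that returns an email address via a callback.
class AccountSdk {
    void getEmail(Callback<String> cb) { cb.onSuccess("user@example.com"); }
}

// Stand-in for the APIProvider; a real one would call the network on another thread.
class ApiProvider {
    void loadPosts(String email, Callback<List<String>> cb) {
        List<String> posts = new ArrayList<>();
        posts.add("First post for " + email);
        posts.add("Second post for " + email);
        cb.onSuccess(posts);
    }
}

// Stand-in for the CacheProvider; a real one would write to SQLite.
class CacheProvider {
    final List<String> stored = new ArrayList<>();

    void savePosts(List<String> posts, Callback<List<String>> cb) {
        stored.addAll(posts);
        cb.onSuccess(posts);
    }
}

// Plays the role of the Activity: three nested callbacks and three error handlers.
class PostsScreen {
    final List<String> displayed = new ArrayList<>();
    final List<String> errors = new ArrayList<>();

    void load(AccountSdk sdk, ApiProvider api, CacheProvider cache) {
        sdk.getEmail(new Callback<String>() {
            @Override public void onSuccess(String email) {
                api.loadPosts(email, new Callback<List<String>>() {
                    @Override public void onSuccess(List<String> posts) {
                        cache.savePosts(posts, new Callback<List<String>>() {
                            @Override public void onSuccess(List<String> saved) {
                                displayed.addAll(saved); // "show on the ListView"
                            }
                            @Override public void onError(Exception e) {
                                errors.add("cache: " + e.getMessage());
                            }
                        });
                    }
                    @Override public void onError(Exception e) {
                        errors.add("api: " + e.getMessage());
                    }
                });
            }
            @Override public void onError(Exception e) {
                errors.add("sdk: " + e.getMessage());
            }
        });
    }
}
```

Even with trivial bodies, the success path sits four indentation levels deep and each level needs its own error branch, which is the maintenance burden the article is pointing at.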

In summary:

- Activities and Fragments become very large and difficult to maintain.

- Too many nested callbacks mean the code is ugly and difficult to understand, so it is painful to make changes or add new features.

- Unit testing becomes challenging, if not impossible, because a lot of the logic lives within the Activities or Fragments, which are arduous to unit test.

In short, once the callbacks pile up, Activities and Fragments turn into a hopeless mess that most people cannot bear to look at.

The awesome new architecture arrives

A new architecture driven by RxJava

We followed the previous approach for about two years. During that time, we made several improvements that slightly mitigated the problems described above. For example, we added several helper classes to reduce the code in Activities and Fragments and we started using Volley in the APIProvider. Despite these changes, our application code wasn’t yet test-friendly and the callback hell issue was still happening too often.

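We suffered under that painful architecture for two years. We did try many things, such as adding helper classes, but they only eased the mess. We also started using Volley in the APIProvider, yet the code was still hard to test and the callback hell kept coming back.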

It wasn’t until 2014 when we started reading about RxJava. After trying it on a few sample projects, we realised that this could finally be the solution to the nested callback problem. If you are not familiar with reactive programming you can read this introduction. In short, RxJava allows you to manage data via asynchronous streams and gives you many operators that you can apply to the stream in order to transform, filter or combine the data.

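It was not until 2014 that we started looking into RxJava. After trying it on a few sample projects, we felt it could be the final answer to our nested callback problem. Put simply, RxJava lets you manage data as asynchronous streams and gives you operators to transform, filter, or combine what an Observable emits.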

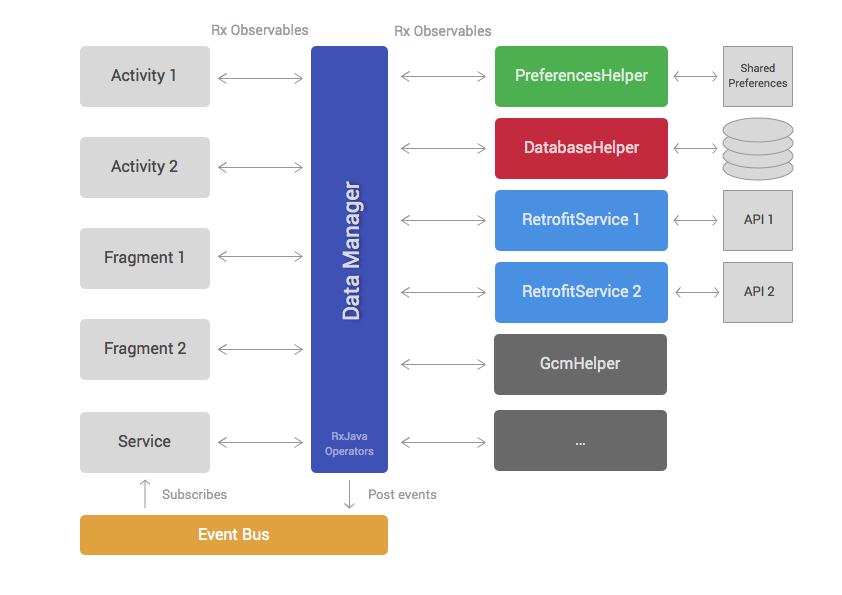

Taking into account the pains we experienced in previous years, we started to think about how the architecture of a new app would look. So we came up with this.

After the pain of the previous years, we thought hard and came up with the following: the architecture diagram for a new app.

Similar to the first approach, this architecture can be separated into a data and view layer. The data layer contains the DataManager and a set of helpers. The view layer is formed by Android framework components like Fragments, Activities, ViewGroups, etc.

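Like the first approach, this architecture is split into a Data layer and a View layer. The Data layer contains the DataManager and a set of helpers. The View layer consists of Fragments, Activities, ViewGroups, and so on.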

Helper classes (third column on diagram) have very specific responsibilities and implement them in a concise manner. For example, most projects have helpers for accessing REST APIs, reading data from databases or interacting with third party SDKs. Different applications will have a different number of helpers but the most common ones are:

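Helpers have very specific responsibilities and implement them concisely, for example calling a REST API, accessing a database, or wrapping a third-party SDK. Different applications have different helpers, but the most common ones are: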

- PreferencesHelper: reads and saves data in SharedPreferences.

- DatabaseHelper: handles accessing SQLite databases.

- Retrofit services: perform calls to REST APIs. We started using Retrofit instead of Volley because it provides support for RxJava. It’s also nicer to use.

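- PreferencesHelper: reads and writes data in SharedPreferences.

- DatabaseHelper: reads and writes SQLite databases.

- Retrofit services: Square's Retrofit acts as the HTTP client. We replaced Volley with Retrofit because it supports RxJava and is nicer to use.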

Most of the public methods inside helper classes will return RxJava Observables.

The DataManager is the brain of the architecture. It extensively uses RxJava operators to combine, filter and transform data retrieved from helper classes. The aim of the DataManager is to reduce the amount of work that Activities and Fragments have to do by providing data that is ready to display and won’t usually need any transformation.

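The two core concepts in RxJava are Observables (the observed, the event source) and Subscribers (the observers). Most public methods in the helper classes return RxJava Observables. The DataManager is the brain of the whole architecture. It makes heavy use of RxJava operators to combine, filter, and further process the data returned by the helpers.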

The code below shows what a DataManager method would look like. This sample method works as follows:

The following example shows what the DataManager does:

- Call the Retrofit service to load a list of blog posts from a REST API

- Save the posts in a local database for caching purposes using the DatabaseHelper.

- Filter the blog posts written today because those are the only ones the view layer wants to display.

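- Call the Retrofit service to request a list of blog posts from the API.

- Use the DatabaseHelper to save the posts to the database.

- Filter out the posts written today, since those are the only ones shown on the UI.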
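The three steps above can be sketched as a single chained method. To keep the sketch runnable with only the JDK, it swaps RxJava for `CompletableFuture`, so it shows the shape of the pipeline rather than the authors' code. `Post`, `PostsService`, the in-memory `DatabaseHelper`, and the injected `today` value are assumptions made for illustration. Only the method name `loadTodayPosts()` comes from the article.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

// Hypothetical model class.
class Post {
    final String title;
    final LocalDate date;

    Post(String title, LocalDate date) {
        this.title = title;
        this.date = date;
    }
}

// Stand-in for the Retrofit service; assumed to deliver the posts asynchronously.
interface PostsService {
    CompletableFuture<List<Post>> loadPosts();
}

// Stand-in for the DatabaseHelper; keeps rows in memory instead of SQLite.
class DatabaseHelper {
    final List<Post> rows = new ArrayList<>();

    CompletableFuture<List<Post>> savePosts(List<Post> posts) {
        rows.addAll(posts);
        return CompletableFuture.completedFuture(posts);
    }
}

class DataManager {
    private final PostsService service;
    private final DatabaseHelper database;
    private final LocalDate today; // injected so the date filter is testable

    DataManager(PostsService service, DatabaseHelper database, LocalDate today) {
        this.service = service;
        this.database = database;
        this.today = today;
    }

    CompletableFuture<List<Post>> loadTodayPosts() {
        return service.loadPosts()                   // 1. load posts from the API
                .thenCompose(database::savePosts)    // 2. cache them locally
                .thenApply(posts -> posts.stream()   // 3. keep only today's posts
                        .filter(post -> post.date.equals(today))
                        .collect(Collectors.toList()));
    }
}
```

The point of the design is that the view layer sees one flat chain and one result, with no nested callbacks. In the RxJava version the article describes, operators on an Observable would play the role that `thenCompose` and `thenApply` play here.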

Components in the view layer such as Activities or Fragments would simply call this method and subscribe to the returned Observable. Once the subscription finishes, the different Posts emitted by the Observable can be directly added to an Adapter in order to be displayed on a RecyclerView or similar.

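Observables emit a series of events and Subscribers (for example Activities or Fragments) handle them. The emitted Posts can be added directly to an Adapter and shown on a RecyclerView or a similar view.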

[BTW: a cold Observable, which is the usual kind, does not emit anything until it has a Subscriber.]

The last element of this architecture is the event bus. The event bus allows us to broadcast events that happen in the data layer, so that multiple components in the view layer can subscribe to these events. For example, a signOut() method in the DataManager can post an event when the Observable completes so that multiple Activities that are subscribed to this event can change their UI to show a signed out state.

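The other piece of this architecture is the event bus. It lets us broadcast events from the Data layer (these are not Android Broadcasts), and different components can then register for and receive the events they care about.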
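As a rough illustration of the idea, here is a minimal in-memory event bus in plain Java. It is not the API of any particular event bus library. `SimpleEventBus`, `SignedOutEvent`, `SessionDataManager`, and `Screen` are hypothetical names, and delivery is synchronous on the calling thread. Only the `signOut()` scenario comes from the article.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical minimal event bus: listeners are registered per event class.
class SimpleEventBus {
    private final Map<Class<?>, List<Consumer<Object>>> listeners = new HashMap<>();

    <T> void register(Class<T> type, Consumer<T> listener) {
        listeners.computeIfAbsent(type, key -> new ArrayList<>())
                .add(event -> listener.accept(type.cast(event)));
    }

    void post(Object event) {
        List<Consumer<Object>> targets = listeners.get(event.getClass());
        if (targets == null) {
            return; // nobody is listening for this event type
        }
        // Iterate over a copy so listeners may register others while handling.
        for (Consumer<Object> target : new ArrayList<>(targets)) {
            target.accept(event);
        }
    }
}

// Hypothetical event posted by the data layer.
class SignedOutEvent {}

// Stand-in for the part of the DataManager that signs the user out.
class SessionDataManager {
    private final SimpleEventBus bus;

    SessionDataManager(SimpleEventBus bus) {
        this.bus = bus;
    }

    void signOut() {
        // A real implementation would clear tokens and caches first.
        bus.post(new SignedOutEvent());
    }
}

// Stand-in for an Activity that switches to a signed-out UI state.
class Screen {
    boolean signedOut = false;

    Screen(SimpleEventBus bus) {
        bus.register(SignedOutEvent.class, event -> signedOut = true);
    }
}
```

The data layer never holds a reference to any screen. It only posts the event, and every registered screen reacts on its own.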

Why was this approach better?

RxJava Observables and operators remove the need for having nested callbacks.

Why is this approach so good? Because Observables and operators remove the need for all those nested callbacks.

The DataManager takes over responsibilities that were previously part of the view layer. Hence, it makes Activities and Fragments more lightweight.

Moving code from Activities and Fragments to the DataManager and helpers means that writing unit tests becomes easier.

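The DataManager takes over much of the code that used to live in the view layer, which makes Activities and Fragments more lightweight. It also makes unit testing easier.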

Clear separation of responsibilities and having the DataManager as the only point of interaction with the data layer, makes this architecture test-friendly. Helper classes or the DataManager can be easily mocked.

The DataManager becomes the only point of interaction with the data layer. With such a clear structure, testing the code is much easier.

What problems did we still have?

For large and very complex projects the DataManager can become too bloated and difficult to maintain.

Although view layer components such as Activities and Fragments became more lightweight, they still have to handle a considerable amount of logic around managing RxJava subscriptions, analysing errors, etc.

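- For very large and complex projects, the DataManager can also become bloated and hard to maintain.

- Although Activities and Fragments are more lightweight, they still have to manage subscriptions and handle errors wherever they subscribe.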

Bringing in the MVP pattern

Integrating Model View Presenter

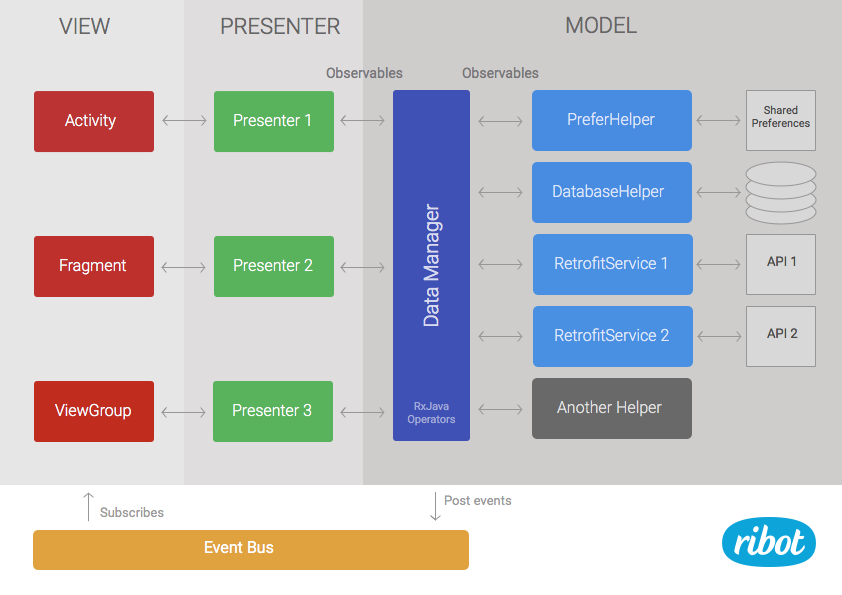

In the past year, several architectural patterns such as MVP or MVVM have been gaining popularity within the Android community. After exploring these patterns on a sample project and article, we found that MVP could bring very valuable improvements to our existing approach. Because our current architecture was divided in two layers (view and data), adding MVP felt natural. We simply had to add a new layer of presenters and move part of the code from the view to presenters.

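Over the past year, patterns such as MVP and MVVM have become popular in the Android community. After studying them, we found that MVP would bring the most valuable improvements to our existing approach. Our two-layer View and Data architecture fits naturally with MVP. We only had to add a presenter layer and move part of the code from the view into it.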

The data layer remains as it was but it’s now called model to be more consistent with the name of the pattern.

Presenters are in charge of loading data from the model and calling the right method in the view when the result is ready. They subscribe to Observables returned by the data manager. Therefore, they have to handle things like schedulers and subscriptions. Moreover, they can analyse error codes or apply extra operations to the data stream if needed. For example, if we need to filter some data and this same filter is not likely to be reused anywhere else, it may make more sense to implement it in the presenter rather than in the data manager.

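The former Data layer is now the Model in MVP. The Presenter loads data from the Model and, once the result is ready, calls the right method on the view (an Activity, ViewGroup, and so on). Presenters subscribe to the Observables returned by the data manager and receive the data they emit.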

For example, if we need a filter that is unlikely to be reused anywhere else, we can implement it in the presenter instead of in the shared data manager.

Below you can see what a public method in the presenter would look like. This code subscribes to the Observable returned by the dataManager.loadTodayPosts() method we defined in the previous section.

The dataManager.loadTodayPosts() method we defined earlier emits its data to whoever subscribes to it.

The mMvpView is the view component that this presenter is assisting. Usually the MVP view is an instance of an Activity, Fragment or ViewGroup.

The View in MVP does not mean Android's View class. It is an instance of a UI component such as an Activity, Fragment, or ViewGroup, which passes itself to the presenter when it attaches to it.

    // Snippet from the Activity's onCreate
    if (presenter == null) presenter = new Presenter1();
    presenter.onTakeView(this);

Like the previous architecture, the view layer contains standard framework components like ViewGroups, Fragments or Activities. The main difference is that these components don’t subscribe directly to Observables. They instead implement an MvpView interface and provide a list of concise methods such as showError() or showProgressIndicator(). The view components are also in charge of handling user interactions such as click events and act accordingly by calling the right method in the presenter. For example, if we have a button that loads the list of posts, our Activity would call presenter.loadTodayPosts() from the onClick listener.

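Unlike the previous architecture, the view layer (Activities and the like) does not subscribe directly to Observables. Instead, the Activity implements a few simple methods, such as showing an error or showing progress, that are defined by an interface. It passes itself as a parameter to the Presenter, and the Presenter calls those methods.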

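User interactions such as button clicks are of course still handled in the Activity, which responds by calling the right method on the presenter.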
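The division of labour between view and presenter can be sketched as follows. As before, this is a JDK-only illustration that uses `CompletableFuture` in place of an RxJava subscription. `mMvpView`, `showError()`, `showProgressIndicator()`, `loadTodayPosts()`, and `onTakeView()` are names taken from the text above. `PostsMvpView`, `showPosts`, the boolean parameter on `showProgressIndicator`, `TodayPostsSource`, and `RecordingView` are assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// The contract the Activity or Fragment implements.
interface PostsMvpView {
    void showPosts(List<String> titles);      // hypothetical method
    void showError();
    void showProgressIndicator(boolean show); // boolean parameter is an assumption
}

// Stand-in for the data manager; only the call the presenter needs.
interface TodayPostsSource {
    CompletableFuture<List<String>> loadTodayPosts();
}

class PostsPresenter {
    private final TodayPostsSource dataManager;
    private PostsMvpView mMvpView;

    PostsPresenter(TodayPostsSource dataManager) {
        this.dataManager = dataManager;
    }

    // Called by the view with itself; passing null releases the view (assumption).
    void onTakeView(PostsMvpView view) {
        mMvpView = view;
    }

    void loadTodayPosts() {
        mMvpView.showProgressIndicator(true);
        dataManager.loadTodayPosts().whenComplete((titles, error) -> {
            if (mMvpView == null) {
                return; // the view was released while loading
            }
            mMvpView.showProgressIndicator(false);
            if (error != null) {
                mMvpView.showError();
            } else {
                mMvpView.showPosts(titles);
            }
        });
    }
}

// A fake view that records calls, usable in a unit test without Android.
class RecordingView implements PostsMvpView {
    final List<String> calls = new ArrayList<>();

    @Override public void showPosts(List<String> titles) { calls.add("posts:" + titles); }
    @Override public void showError() { calls.add("error"); }
    @Override public void showProgressIndicator(boolean show) { calls.add("progress:" + show); }
}
```

Because the presenter only talks to the `PostsMvpView` interface, a test can hand it a `RecordingView` and check the sequence of calls. On Android the result would also need to be delivered on the main thread, which this sketch leaves out.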

If you want to see a full working sample of this MVP-based architecture, you can check out our Android Boilerplate project on GitHub. You can also read more about it in ribot’s architecture guidelines.

For more articles on MVP, search Baidu for the keywords "MVP Android".

Why is this approach better?

Why is this one even better?

- Activities and Fragments become very lightweight. Their only responsibilities are to set up/update the UI and handle user events. Therefore, they become easier to maintain.

- We can now easily write unit tests for the presenters by mocking the view layer. Before, this code was part of the view layer so we couldn’t unit test it. The whole architecture becomes very test-friendly.

- If the data manager is becoming bloated, we can mitigate this problem by moving some code to the presenters.

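- Activity and Fragment code shrinks considerably. The logic all moves to the Presenter, so the Activity only sets up the UI and handles user events such as button clicks.

- Presenters can have their own unit tests. You only need to test the methods the Presenter exposes, with the view layer mocked.

- If the DataManager becomes bloated, we can move some of its code into the relevant Presenters.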

What problems do we still have?

Having a single data manager can still be an issue when the codebase becomes very large and complex. We haven’t reached the point where this is a real problem but we are aware that it could happen.

Having only one DataManager is still an issue, especially when the codebase grows large. We have not reached that point yet, but we know it could happen someday.

It’s important to mention that this is not the perfect architecture. In fact, it’d be naive to think there is a unique and perfect one that will solve all your problems forever. The Android ecosystem will keep evolving at a fast pace and we have to keep up by exploring, reading and experimenting so that we can find better ways to continue building excellent Android apps.

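No perfect architecture will solve all your problems. The whole Android ecosystem changes far too fast and is not standardized, so we have to keep exploring in order to find better ways to build Android apps.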

I hope you enjoyed this article and you found it useful. If so, don’t forget to click the recommend button. Also, I’d love to hear your thoughts about our latest approach.

I hope that after reading this you will have some suggestions or thoughts on our latest approach.

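[I translated this article in my spare time while studying new technology. It is written in plain technical language for my own use, so that I can look things up later.]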

Original article: https://medium.com/ribot-labs/android-application-architecture-8b6e34acda65

Introduction to MVP: http://www.jcodecraeer.com/a/anzhuokaifa/androidkaifa/2015/0425/2782.html

RxAndroid: https://github.com/ReactiveX/RxAndroid

EventBus: https://github.com/greenrobot/EventBus

posted @ 2015-12-18 13:07 paulwong