

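In the field of distributed algorithms there is a very important algorithm called Paxos. How important is it? Google's Chubby paper [1] says:

all working protocols for asynchronous consensus we have so far encountered have Paxos at their core.

Wikipedia describes the Paxos algorithm in more detail. The Chinese article describes the rules for choosing a value [2], while the English article describes the message flow of the Paxos protocol's phases [3]. The Chinese article was written independently rather than translated from the English one, so the two complement each other well. Paxos was proposed by Leslie Lamport, who wrote in Paxos Made Simple [4]:

The Paxos algorithm, when presented in plain English, is very simple.

You may have studied Paxos for a long time and still feel somewhat confused. If so, that sentence may be a little discouraging. The algorithm is nonetheless widely regarded as fairly intricate, especially when you try to pin down every detail with a programmer's rigor; your head fills up with question marks. Lamport himself spent nine years refining its theory.

In practice, ordinary developers do not need to know every detail of Paxos or how to implement it. It is enough to know that Paxos is a distributed consensus algorithm, often used to elect a leader among replicas. This article mainly introduces the common applications of Paxos. One day your system may grow to a certain scale, and you will then know that this technique exists and can help you solve some architectural problems correctly and elegantly.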

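1. Database replication, log replication, and so on. For example, BDB (Berkeley DB) replication uses a Paxos-compatible algorithm. The main use of Paxos is keeping data consistent across multiple nodes.

2. Naming service. Large systems usually contain many internal interface services that call one another.

1) The usual implementation hard-codes the services' IPs/hostnames in configuration. When a service fails, someone edits the configuration file by hand or repoints DNS. This is hard to maintain, and the more internal units there are, the higher the failure rate.

2) LVS dual-machine redundancy. The drawback is that every unit needs twice the resources.

If all naming services are managed with Paxos, available services can be assigned to clients with high availability. ZooKeeper additionally provides a watch feature: when a watched object changes, a notification is sent automatically, so all clients use a consistent, highly available interface.

3. Configuration management

1) The usual approach is editing configuration files by hand. This is error-prone and needs manual intervention to take effect, so the nodes cannot all reach a consistent state at the same time.

2) Large applications usually implement their own configuration service, for example by centralizing configuration behind an HTTP web service. The drawback is that clients cannot learn of an update immediately. The order in which nodes load it is not guaranteed either, so the configuration across the system is not in one consistent state.

4. Membership, user roles, and access control lists. Suppose a user's permission changes, say from administrator to ordinary user. The change should take effect immediately on every server and every remote CDN node; otherwise the consequences may be unacceptable.

5. Number (ID) allocation. The usual simple solutions each have a drawback:

- database auto-increment IDs make database sharding difficult;
- program-generated GUIDs are usually too long.

A more elegant approach is to use Paxos to choose one of several replicas as the master and let the master allocate numbers. When the master fails, Paxos is used again to choose another master.

These are some common applications of Paxos. For scenarios like these, other solutions cannot provide high availability automatically, and they are far less simple and elegant than Paxos.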
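The applications above use Paxos only as a black box, but the core "choose a value" rule is small enough to show. Below is a minimal single-decree Paxos sketch in Java. It makes several simplifying assumptions:

- all acceptors live in one process;
- calls are synchronous and messages are never lost;
- the caller supplies unique, increasing ballot numbers.

The class and method names (PaxosSketch, Acceptor, propose) are hypothetical. Real systems such as Chubby add leader election, a replicated log and durable storage on top of this.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal single-decree Paxos sketch (hypothetical names): all "nodes" live in
// one process, calls are synchronous, and no messages are lost or delayed.
final class PaxosSketch {

    /** An acceptor's state: highest ballot promised plus last accepted proposal. */
    static final class Acceptor {
        private long promised = -1;        // highest ballot promised so far
        private long acceptedBallot = -1;  // ballot of the last accepted value, -1 = none
        private String acceptedValue;      // null until something is accepted

        /** Phase 1b: promise to ignore ballots below n; report any accepted value. */
        synchronized Promise prepare(long n) {
            if (n <= promised) {
                return null;               // already promised an equal or higher ballot
            }
            promised = n;
            return new Promise(acceptedBallot, acceptedValue);
        }

        /** Phase 2b: accept (n, v) unless a higher ballot was promised meanwhile. */
        synchronized boolean accept(long n, String v) {
            if (n < promised) {
                return false;
            }
            promised = n;
            acceptedBallot = n;
            acceptedValue = v;
            return true;
        }
    }

    /** Reply to prepare(): the acceptor's previously accepted proposal, if any. */
    record Promise(long acceptedBallot, String acceptedValue) {}

    /** One proposer round with ballot n; returns the chosen value, or null on failure. */
    static String propose(List<Acceptor> acceptors, long n, String myValue) {
        int majority = acceptors.size() / 2 + 1;

        // Phase 1a: send prepare(n) to every acceptor and collect the promises.
        List<Promise> promises = new ArrayList<>();
        for (Acceptor a : acceptors) {
            Promise p = a.prepare(n);
            if (p != null) {
                promises.add(p);
            }
        }
        if (promises.size() < majority) {
            return null;
        }

        // The "choose value" rule: if any promising acceptor already accepted a
        // value, re-propose the one with the highest ballot instead of our own.
        String value = myValue;
        long highest = -1;
        for (Promise p : promises) {
            if (p.acceptedBallot() > highest) {
                highest = p.acceptedBallot();
                value = p.acceptedValue();
            }
        }

        // Phase 2a: send accept(n, value); the value is chosen once a majority accepts.
        int accepted = 0;
        for (Acceptor a : acceptors) {
            if (a.accept(n, value)) {
                accepted++;
            }
        }
        return accepted >= majority ? value : null;
    }
}
```

How the rounds play out with three fresh acceptors:

1. `propose(acceptors, 1, "A")` returns "A".
2. A later `propose(acceptors, 2, "B")` still returns "A". Phase 1 reveals the already accepted value, and the proposer must adopt it.
3. Repeating ballot 2 returns null, because every acceptor has already promised ballot 2.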

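ZooKeeper [5], open-sourced by Yahoo!, is an open-source Paxos-like implementation. Its programming interface looks much like a small distributed file system with strong consistency guarantees, and it fits all of the scenarios above. Unfortunately, ZooKeeper does not follow the Paxos protocol. It uses its own optimized two-phase-commit protocol, so its theory [6] has not been fully proven. However, ZooKeeper is already used successfully inside Yahoo! in HBase, Yahoo! Message Broker, the Fetch Service of the Yahoo! crawler and other systems, so it can be adopted with reasonable confidence.

Finally, here are some implementation lessons from Paxos Made Live [7]: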

* There are significant gaps between the description of the Paxos algorithm and the needs of a real-world system. In order to build a real-world system, an expert needs to use numerous ideas scattered in the literature and make several relatively small protocol extensions. The cumulative effort will be substantial and the final system will be based on an unproven protocol.

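* Because Chubby had to fill in details that the Paxos paper does not mention, the final system is not one whose correctness has been fully verified in theory.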

* The fault-tolerance computing community has not developed the tools to make it easy to implement their algorithms.

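* The distributed fault-tolerance field lacks supporting tools for implementing its algorithms. Compilers, by comparison, are a complex field, but tools such as yacc and ANTLR have reduced its difficulty to a minimum.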

* The fault-tolerance computing community has not paid enough attention to testing, a key ingredient for building fault-tolerant systems.

* The distributed fault-tolerance field lacks testing methods.

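Some background is worth adding here: proving a distributed fault-tolerant algorithm correct is usually harder than implementing it. Google could not prove that Chubby is reliable, and Yahoo! does not dare to guarantee the theoretical correctness of ZooKeeper. For most systems, the best anyone can say is cautious: after running in practice for a long time, no major problems have been found so far.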

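Abstract: Introduction: the ZooKeeper distributed service framework is a subproject of Apache Hadoop. It is mainly used to solve data-management problems that distributed applications often run into, such as:

- a unified naming service;
- state synchronization;
- cluster management;
- management of distributed application configuration items.

This article explains, from a user's perspective, how to install ZooKeeper and what each option in its configuration file means. It then analyzes ZooKeeper's typical use cases (configuration file management, cluster management, synchronization locks, Leader...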

iBATIS and DBCP database connection problems (Part 1)

(2007-12-20 22:43:33)

I'm lazy, so instead of writing it myself I'll just quote two blog posts I found:

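Recently our website has shown the following behavior: under high concurrency, the service threads of Tomcat or JBoss hang, and the server frequently reports the database connection error java.net.SocketException: Broken pipe. At first glance it looked like one of two things:

- the DB could not keep up, or
- connections had reached the DB's wait_timeout and been forcibly closed.

With those two suspicions in mind I collected some material online. One explanation is that connections closed by the DB after wait_timeout remain idle in the connection pool. When the application obtains such a connection and performs a DB operation, this error occurs.

After reading the DBCP connection pool source code and running some tests, however, I found that this explanation is not correct.

1. First, the Broken pipe error is not caused by connection timeouts. It occurs mostly on Linux, under high concurrency when network resources run short. A SIGPIPE signal is sent, and on Linux the default action for it is to terminate the program. I have not yet found a proper fix. One suggestion is to set _JAVA_SR_SIGNUM = 12 in the Linux environment, but in my tests that did not solve the problem. I am still looking into it.

2. Since the Broken pipe problem is not fully solved, the DBCP pool has to forcibly reclaim abandoned connections. Without forced reclamation the pool will eventually be exhausted ("pool exhausted"), so this protection must be added. The configuration is: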

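- # Whether to log the timeout error of a connection when it is automatically reclaimed

- dbcp.logAbandoned=true

- # Whether to automatically reclaim timed-out (abandoned) connections

- dbcp.removeAbandoned=true

- # Timeout (in seconds)

- dbcp.removeAbandonedTimeout=150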

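3. Next, the DB's wait_timeout setting for idle connections: the DB forcibly closes any connection that has been idle longer than this value. My tests showed the following. Suppose the DB has forcibly closed an idle connection and DBCP later fails to activate it at checkout. DBCP then automatically discards it and takes another idle connection from the pool or creates a new one. Judging from the source code, this automatic activation logic needs no configuration; it is DBCP's default behavior. So the many claims online that a mismatch between the pool's timeouts and the DB causes Broken pipe are wrong, at least for DBCP. Perhaps C3P0 does behave that way.

Still, from a pool-tuning perspective it is not good to have connections that are sitting idle in the pool forcibly closed by the DB. The following settings can be combined to address this: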


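- # default false: whether to validate connections while idle; connections that fail validation are dropped. Requires the idle-object evictor to be running

- dbcp.testWhileIdle = true

- # default -1: interval in milliseconds between runs of the idle-object evictor; a negative value means the evictor does not run

- # if eviction is wanted, this value should preferably be smaller than minEvictableIdleTimeMillis

- dbcp.timeBetweenEvictionRunsMillis = 300000

- # default 1000*60*30: minimum time, in milliseconds, a connection must stay idle in the pool before the evictor may evict it

- # if eviction is wanted, this value should preferably be smaller than the DB's wait_timeout

- dbcp.minEvictableIdleTimeMillis = 320000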

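4. Finally, DBCP's maxWait parameter should not be set too large. When the pool is exhausted, the requesting thread is suspended for up to maxWait to see whether a connection becomes available. Pool exhaustion is generally rare, and when it does happen the cause is usually excessive concurrency. Suspending threads at that point means suspending the server's request threads. Once all server threads are suspended, not only DB access but even ordinary pages become unreachable. The result is even worse.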
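To make item 4 concrete, here is a toy model of a pool with a bounded wait. It is not DBCP's implementation. BoundedWaitPool and its methods are hypothetical names, and a java.util.concurrent.Semaphore stands in for the pool's connection slots. With a short maxWait, an exhausted pool fails fast instead of parking the calling server thread.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

// Toy model of maxWait (hypothetical class, not DBCP's implementation):
// a borrower waits at most maxWaitMillis for a free slot, then gives up.
final class BoundedWaitPool {
    private final Semaphore slots;
    private final long maxWaitMillis;

    BoundedWaitPool(int maxActive, long maxWaitMillis) {
        this.slots = new Semaphore(maxActive, true); // fair: waiters are served in order
        this.maxWaitMillis = maxWaitMillis;
    }

    /** Returns true if a "connection" slot was obtained in time. */
    boolean borrow() {
        try {
            if (maxWaitMillis < 0) {   // negative: wait indefinitely
                slots.acquire();
                return true;
            }
            return slots.tryAcquire(maxWaitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return false;
        }
    }

    /** Returns a previously borrowed slot to the pool. */
    void giveBack() {
        slots.release();
    }
}
```

With `new BoundedWaitPool(1, 50)`:

- the first `borrow()` succeeds;
- a second one returns false after roughly 50 ms;
- borrowing works again after `giveBack()`.

A negative maxWait here means waiting indefinitely, which is exactly the behavior item 4 warns about.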

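With the settings above, the DBCP pool should no longer leak connections (leaks in application code aside). The Broken pipe problem on Linux under high concurrency still needs a proper fix, though. Why Tomcat or JBoss service threads hang under high concurrency has not been pinned down either. Now that DBCP has been ruled out, I suspect the problem lies in the connection between mod_jk and Tomcat, and the root cause may also turn out to be broken pipe. Still investigating...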
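Item 3 contains two "should preferably be smaller than" rules, and they are easy to break when values are tuned one at a time. A small hypothetical checker for them is sketched below:

- the property names are the dbcp.* keys used in item 3;
- the fallback defaults (-1 and 1000*60*30) come from the comments there;
- you must supply the DB's wait_timeout yourself, in seconds (for example 28800, MySQL's default).

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper that checks the ordering rules from item 3:
// evictor interval < minEvictableIdleTimeMillis < DB wait_timeout.
final class EvictionConfigCheck {

    /** Parses properties text, e.g. the dbcp.* lines from item 3. */
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    /** Returns human-readable problems; an empty list means both rules hold. */
    static List<String> check(Properties p, long dbWaitTimeoutSeconds) {
        List<String> problems = new ArrayList<>();
        boolean testWhileIdle = Boolean.parseBoolean(
                p.getProperty("dbcp.testWhileIdle", "false").trim());
        // Fallback defaults taken from the comments in item 3.
        long runsMillis = Long.parseLong(
                p.getProperty("dbcp.timeBetweenEvictionRunsMillis", "-1").trim());
        long minIdleMillis = Long.parseLong(
                p.getProperty("dbcp.minEvictableIdleTimeMillis", "1800000").trim());

        if (runsMillis < 0) {
            problems.add("idle-object evictor is disabled");
            if (testWhileIdle) {
                problems.add("testWhileIdle has no effect without the evictor");
            }
            return problems;
        }
        if (runsMillis >= minIdleMillis) {
            problems.add("timeBetweenEvictionRunsMillis should be < minEvictableIdleTimeMillis");
        }
        if (minIdleMillis >= dbWaitTimeoutSeconds * 1000) {
            problems.add("minEvictableIdleTimeMillis should be < DB wait_timeout");
        }
        return problems;
    }
}
```

Take the values from item 3 (300000 and 320000 ms). With a wait_timeout of 28800 s, check() returns an empty list. With a wait_timeout of 300 s, it reports that minEvictableIdleTimeMillis is too large.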

2. Connecting to the database from iBATIS through DBCP

I. Create the table (I used Oracle 9.2.0.1)

prompt PL/SQL Developer import file

prompt Created on 2007-05-24 by Administrator

set feedback off

set define off

prompt Dropping T_ACCOUNT...

drop table T_ACCOUNT cascade constraints;

prompt Creating T_ACCOUNT...

create table T_ACCOUNT

(

ID NUMBER not null,

FIRSTNAME VARCHAR2(2),

LASTNAME VARCHAR2(4),

EMAILADDRESS VARCHAR2(60)

)

;

alter table T_ACCOUNT

add constraint PK_T_ACCOUNT primary key (ID);

prompt Disabling triggers for T_ACCOUNT...

alter table T_ACCOUNT disable all triggers;

prompt Loading T_ACCOUNT...

insert into T_ACCOUNT (ID, FIRSTNAME, LASTNAME, EMAILADDRESS)

values (1, '王', '三旗', 'E_wsq@msn.com');

insert into T_ACCOUNT (ID, FIRSTNAME, LASTNAME, EMAILADDRESS)

values (2, '冷', '宮主', 'E_wsq@msn.com');

commit;

prompt 2 records loaded

prompt Enabling triggers for T_ACCOUNT...

alter table T_ACCOUNT enable all triggers;

set feedback on

set define on

prompt Done.

II. Add the following JARs to the project:

commons-dbcp-1.2.2.jar

commons-pool-1.3.jar

ibatis-common-2.jar

ibatis-dao-2.jar

ibatis-sqlmap-2.jar

III. Write the following properties file:

jdbc.properties

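# Connection settings

driverClassName=oracle.jdbc.driver.OracleDriver

url=jdbc:oracle:thin:@90.0.12.112:1521:ORCL

username=gzfee

password=1

# Initial number of connections

initialSize=10

# Maximum number of idle connections

maxIdle=20

# Minimum number of idle connections

minIdle=5

# Maximum number of active connections

maxActive=50

# Whether to log the timeout error of a connection when it is automatically reclaimed

logAbandoned=true

# Whether to automatically reclaim timed-out (abandoned) connections

removeAbandoned=true

# Timeout (in seconds)

removeAbandonedTimeout=180

# Maximum time to wait for a connection, in milliseconds (1000 ms = 1 second)

maxWait=1000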

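IV. Put the properties file created above under classes

Note: when testing with a main class, it goes in the project's classes directory; when testing through the web site, it goes in WEB-INF/classes.

V. Write the file that connects iBATIS to DBCP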

DBCPSqlMapConfig.xml

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE sqlMapConfig

PUBLIC "-//ibatis.apache.org//DTD SQL Map Config 2.0//EN"

"http://ibatis.apache.org/dtd/sql-map-config-2.dtd">

<sqlMapConfig>

<properties resource ="jdbc.properties"/>

<transactionManager type ="JDBC">

<dataSource type ="DBCP">

<property name ="JDBC.Driver" value ="${driverClassName}"/>

<property name ="JDBC.ConnectionURL" value ="${url}" />

<property name ="JDBC.Username" value ="${username}" />

<property name ="JDBC.Password" value ="${password}" />

<property name ="Pool.MaximumWait" value ="30000" />

<property name ="Pool.ValidationQuery" value ="select sysdate from dual" />

<property name ="Pool.LogAbandoned" value ="true" />

<property name ="Pool.RemoveAbandonedTimeout" value ="1800000" />

<property name ="Pool.RemoveAbandoned" value ="true" />

</dataSource>

</transactionManager>

<sqlMap resource="com/mydomain/data/Account.xml"/> <!-- mapping for the T_ACCOUNT table -->

</sqlMapConfig>

VI. Write the table mapping file

Account.xml

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE sqlMap

PUBLIC "-//ibatis.apache.org//DTD SQL Map 2.0//EN"

"http://ibatis.apache.org/dtd/sql-map-2.dtd">

<sqlMap namespace="Account">

<!-- Use type aliases to avoid typing the full classname every time. -->

<typeAlias alias="Account" type="com.mydomain.domain.Account"/>

<!-- Result maps describe the mapping between the columns returned

from a query, and the class properties. A result map isn't

necessary if the columns (or aliases) match to the properties

exactly. -->

<resultMap id="AccountResult" class="Account">

<result property="id" column="id"/>

<result property="firstName" column="firstName"/>

<result property="lastName" column="lastName"/>

<result property="emailAddress" column="emailAddress"/>

</resultMap>

<!-- Select with no parameters using the result map for Account class. -->

<select id="selectAllAccounts" resultMap="AccountResult">

select * from T_ACCOUNT

</select>

<!-- A simpler select example without the result map. Note the

aliases to match the properties of the target result class. -->

<select id="selectAccountById" parameterClass="int" resultClass="Account">

select

id as id,

firstName as firstName,

lastName as lastName,

emailAddress as emailAddress

from T_ACCOUNT

where id = #id#

</select>

<!-- Insert example, using the Account parameter class -->

<insert id="insertAccount" parameterClass="Account">

insert into T_ACCOUNT (

id,

firstName,

lastName,

emailAddress

)

values (

#id#, #firstName#, #lastName#, #emailAddress#

)

</insert>

<!-- Update example, using the Account parameter class -->

<update id="updateAccount" parameterClass="Account">

update T_ACCOUNT set

firstName = #firstName#,

lastName = #lastName#,

emailAddress = #emailAddress#

where

id = #id#

</update>

<!-- Delete example, using an integer as the parameter class -->

<delete id="deleteAccountById" parameterClass="int">

delete from T_ACCOUNT where id = #id#

</delete>

</sqlMap>
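The sqlMap above refers to com.mydomain.domain.Account through its typeAlias, but the class itself is never shown. Below is a minimal JavaBean that would fit the statements; it is an assumption, not the original project's code.

- The property names match the resultMap and the #firstName#-style parameters.
- id is an int to match parameterClass="int".
- In the real project the file would also declare package com.mydomain.domain.

```java
// Hypothetical JavaBean for the typeAlias com.mydomain.domain.Account used above.
// In the real project it would start with: package com.mydomain.domain;
// iBATIS reads and writes these properties through the getters and setters.
class Account {
    private int id;              // matches parameterClass="int" in the statements
    private String firstName;
    private String lastName;
    private String emailAddress;

    public int getId() { return id; }
    public void setId(int id) { this.id = id; }

    public String getFirstName() { return firstName; }
    public void setFirstName(String firstName) { this.firstName = firstName; }

    public String getLastName() { return lastName; }
    public void setLastName(String lastName) { this.lastName = lastName; }

    public String getEmailAddress() { return emailAddress; }
    public void setEmailAddress(String emailAddress) { this.emailAddress = emailAddress; }

    @Override
    public String toString() {
        return "Account[id=" + id + ", " + firstName + " " + lastName + ", " + emailAddress + "]";
    }
}
```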

Java Scripting and JRuby Examples

Author: Martin Kuba

The new JDK 6.0 has a new API for scripting languages, which seems to be a good idea. I decided this is a good opportunity to learn the Ruby language :-) But I haven't found a simple example of it using Google, so here it is.

Download and install JDK 6.0. You need version 6, as the scripting support was added in version 6. Then you need to put five files in your classpath:

All you need to do is to add these files into the CLASSPATH.

(If you don't know how to do that, please read the Java tutorial first. Don't forget to include the current directory into the CLASSPATH. On Linux, you can do:

export CLASSPATH=.

for i in *.jar ; do CLASSPATH=$CLASSPATH:$i; done

).

Here is simple Java code that executes a Ruby script defining some functions and then calls them:

package cz.cesnet.meta.jruby;

import javax.script.ScriptEngine;

import javax.script.ScriptEngineFactory;

import javax.script.ScriptEngineManager;

import javax.script.ScriptException;

import javax.script.ScriptContext;

import java.io.BufferedReader;

import java.io.FileNotFoundException;

import java.io.FileReader;

public class JRubyExample1 {

public static void main(String[] args) throws ScriptException, FileNotFoundException {

//list all available scripting engines

listScriptingEngines();

//get jruby engine

ScriptEngine jruby = new ScriptEngineManager().getEngineByName("jruby");

//process a ruby file

jruby.eval(new BufferedReader(new FileReader("myruby.rb")));

//call a method defined in the ruby source

jruby.put("number", 6);

jruby.put("title", "My Swing App");

long fact = (Long) jruby.eval("showFactInWindow($title,$number)");

System.out.println("fact: " + fact);

jruby.eval("$myglobalvar = fact($number)");

long myglob = (Long) jruby.getBindings(ScriptContext.ENGINE_SCOPE).get("myglobalvar");

System.out.println("myglob: " + myglob);

}

public static void listScriptingEngines() {

ScriptEngineManager mgr = new ScriptEngineManager();

for (ScriptEngineFactory factory : mgr.getEngineFactories()) {

System.out.println("ScriptEngineFactory Info");

System.out.printf("\tScript Engine: %s (%s)\n", factory.getEngineName(), factory.getEngineVersion());

System.out.printf("\tLanguage: %s (%s)\n", factory.getLanguageName(), factory.getLanguageVersion());

for (String name : factory.getNames()) {

System.out.printf("\tEngine Alias: %s\n", name);

}

}

}

}

And here is the Ruby code in file myruby.rb:

def fact(n)

if n==0

return 1

else

return n*fact(n-1)

end

end

class CloseListener

include java.awt.event.ActionListener

def actionPerformed(event)

puts "CloseListener.actionPerformed() called"

java.lang.System.exit(0)

end

end

def showFactInWindow(title,number)

f = fact(number)

frame = javax.swing.JFrame.new(title)

frame.setLayout(java.awt.FlowLayout.new())

button = javax.swing.JButton.new("Close")

button.addActionListener(CloseListener.new)

frame.contentPane.add(javax.swing.JLabel.new(number.to_s+"! = "+f.to_s))

frame.contentPane.add(button)

frame.defaultCloseOperation=javax.swing.WindowConstants::EXIT_ON_CLOSE

frame.pack()

frame.visible=true

return f

end

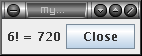

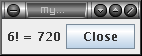

The Ruby script defines three things:

- a function fact(n), which computes the factorial of a given number;
- a (Ruby) class CloseListener, which implements the (Java) interface java.awt.event.ActionListener by including it;
- a function showFactInWindow, which builds a GUI window showing a label and a close button, assigns the CloseListener class as the listener for the button, and returns the value of n!.

Please note that Ruby and Java classes can be mixed together.

(To run the example, save the code above into the files cz/cesnet/meta/jruby/JRubyExample1.java and myruby.rb, then compile and run it using

javac cz/cesnet/meta/jruby/JRubyExample1.java

java cz.cesnet.meta.jruby.JRubyExample1

)

You can pass any Java object to Ruby with the put("key",object) method of the ScriptEngine class. The key becomes a global variable in Ruby, so you can access it as $key. The numerical value returned by showFactInWindow is of Ruby's Fixnum class; it is converted into java.lang.Long and returned by the eval() method.

Any additional global variable in the Ruby script can be obtained in Java through getBindings(), as the example shows for the $myglobalvar Ruby global variable.

In JRuby 0.9.8, it was not possible to override or add methods of Java classes in Ruby and call them from Java. In JRuby 1.0 it is possible. If you have read the previous version of this page, please note that the syntax for implementing Java interfaces changed in JRuby 1.0 to use include instead of <.

This is a Java interface MyJavaInterface.java:

package cz.cesnet.meta.jruby;

public interface MyJavaInterface {

String myMethod(Long num);

}

This is a Java class MyJavaClass.java:

package cz.cesnet.meta.jruby;

public class MyJavaClass implements MyJavaInterface {

public String myMethod(Long num) {

return "I am Java method, num="+num;

}

}

This is a Ruby code example2.rb:

#example2.rb

class MyDerivedClass < Java::cz.cesnet.meta.jruby.MyJavaClass

def myMethod(num)

return "I am Ruby method, num="+num.to_s()

end

end

class MyImplClass

include Java::cz.cesnet.meta.jruby.MyJavaInterface

def myMethod(num)

return "I am Ruby method in interface impl, num="+num.to_s()

end

def mySecondMethod()

return "I am an additional Ruby method"

end

end

This is the main code.

package cz.cesnet.meta.jruby;

import javax.script.ScriptEngine;

import javax.script.ScriptEngineManager;

import javax.script.ScriptException;

import java.io.BufferedReader;

import java.io.FileNotFoundException;

import java.io.FileReader;

import java.lang.reflect.Method;

public class JRubyExample2 {

public static void main(String[] args) throws ScriptException, FileNotFoundException {

//get jruby engine

ScriptEngine jruby = new ScriptEngineManager().getEngineByName("jruby");

//process a ruby file

jruby.eval(new BufferedReader(new FileReader("example2.rb")));

//get a Ruby class extended from Java class

MyJavaClass mjc = (MyJavaClass) jruby.eval("MyDerivedClass.new");

String s = mjc.myMethod(2L);

//WOW! the Ruby method is visible

System.out.println("s: " + s);

//get a Ruby class implementing a Java interface

MyJavaInterface mji = (MyJavaInterface) jruby.eval("MyImplClass.new");

String s2 = mji.myMethod(3L);

//WOW ! the Ruby method is visible

System.out.println("s2: " + s2);

//however the other methods are not visible :-(

for (Method m : mji.getClass().getMethods()) {

System.out.println("m.getName() = " + m.getName());

}

}

}

The output is

s: I am Ruby method, num=2

s2: I am Ruby method in interface impl, num=3

m.getName() = myMethod

m.getName() = hashCode

m.getName() = equals

m.getName() = toString

m.getName() = isProxyClass

m.getName() = getProxyClass

m.getName() = getInvocationHandler

m.getName() = newProxyInstance

m.getName() = getClass

m.getName() = wait

m.getName() = wait

m.getName() = wait

m.getName() = notify

m.getName() = notifyAll

So you see, a Ruby method overriding a method in a Java class and a Ruby method implementing a method in a Java interface are both visible in Java! However, additional methods are not visible in Java.

A useful application of JRuby in Java is in web applications, when you need to give your users the option of writing complex user-defined conditions. For example, I needed to let users specify conditions for other users to access a chat room. The conditions were based on attributes of those users provided by the authentication system Shibboleth. So I implemented JRuby scripting in a Tomcat-deployed web application. I used Tomcat 6.0, but 5.5 should work the same way. Here are my findings.

First the easy part. Just add the jar files needed for JRuby to WEB-INF/lib/ directory and it just works. Great ! Now your users can enter any Ruby script, and you can provide it with input data using global variables, execute it and read its output value, in a class called from a servlet. In the following example, I needed a boolean value as the output, so the usual Ruby rules for truth values are simulated:

public boolean evalRubyScript() {

ScriptEngine jruby = engineManager.getEngineByName("jruby");

jruby.put("attrs", getWhatEverInputDataYouNeedToProvide());

try {

Object retval = jruby.eval(script);

if (retval instanceof Boolean) return ((Boolean) retval);

return retval != null;

} catch (ScriptException e) {

throw new RuntimeException(e);

}

}

However, your users can type anything. Sooner or later, somebody will type something harmful, like java.lang.System.exit(1) or File.readlines('/etc/passwd'). You have to limit what users can do. Fortunately, Java has a security framework. It is not enabled by default, but you can enable it by starting Tomcat with the -security option:

$CATALINA_BASE/bin/catalina.sh start -security

That runs Tomcat's JVM with a SecurityManager enabled. Alas, your web application most likely will not work with security enabled, because your code and the libraries you use can no longer read system properties, read files, etc. So you have to allow them to do so. Edit the file $CATALINA_BASE/conf/catalina.policy and add the following code, replacing mywebapp with the name of your web application:

//first allow everything for trusted libraries, add your own

grant codeBase "jar:file:${catalina.base}/webapps/mywebapp/WEB-INF/lib/stripes.jar!/-" {

permission java.security.AllPermission;

};

grant codeBase "jar:file:${catalina.base}/webapps/mywebapp/WEB-INF/lib/log4j-1.2.13.jar!/-" {

permission java.security.AllPermission;

};

//JSP pages don't compile without this

grant codeBase "file:${catalina.base}/work/Catalina/localhost/mywebapp/" {

permission java.lang.RuntimePermission "defineClassInPackage.org.apache.jasper.runtime";

};

//if you need to read or write temporary file, use this

grant codeBase "file:${catalina.base}/webapps/mywebapp/WEB-INF/classes/-" {

permission java.io.FilePermission "${java.io.tmpdir}/file.ser", "read,write" ;

};

// and now, allow only the basic things, as this applies to all code in your webapp including JRuby

grant codeBase "file:${catalina.base}/webapps/mywebapp/-" {

permission java.util.PropertyPermission "*", "read";

permission java.lang.RuntimePermission "accessDeclaredMembers";

permission java.lang.RuntimePermission "createClassLoader";

permission java.lang.RuntimePermission "defineClassInPackage.java.lang";

permission java.lang.RuntimePermission "getenv.*";

permission java.util.PropertyPermission "*", "read,write";

permission java.io.FilePermission "${user.home}/.jruby", "read" ;

permission java.io.FilePermission "file:${catalina.base}/webapps/mywebapp/WEB-INF/lib/jruby.jar!/-", "read" ;

};

Your webapp will probably still not work after you add this code to the policy file, because your code may need permissions for other things. I have found that the easiest way to find what's missing is to run Tomcat with security debugging enabled:

$ rm logs/*

$ CATALINA_OPTS=-Djava.security.debug=access,failure bin/catalina.sh run -security

...

reproduce the problem by accessing the webapp

...

$ bin/catalina.sh stop

$ fgrep -v 'access allowed' logs/catalina.out

This will filter out allowed accesses, so what remains are denied accesses:

access: access denied (java.lang.RuntimePermission accessClassInPackage.sun.misc)

java.lang.Exception: Stack trace

at java.lang.Thread.dumpStack(Thread.java:1206)

at java.security.AccessControlContext.checkPermission(AccessControlContext.java:313)

...

access: domain that failed ProtectionDomain (file:/home/joe/tomcat-6.0.13/webapps/mywebapp/WEB-INF/lib/somelib.jar <no signer certificates>)

That means the code in somelib.jar needs the RuntimePermission accessClassInPackage.sun.misc to run, so you have to add it to the catalina.policy file. Then repeat these steps until your web application runs without problems.
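The fgrep step still leaves you copying permissions into catalina.policy by hand. A small hypothetical helper that turns "access denied (...)" lines into candidate permission entries might look like this. It has two limitations:

- it assumes the unquoted log format shown above;
- it does not handle paths containing spaces.

Review every generated line before pasting it into a grant block. Blindly granting whatever was denied would also grant whatever a malicious script attempted.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: turns "access denied (...)" lines from
// -Djava.security.debug=access,failure output into candidate policy lines.
final class DeniedPermissions {

    // Captures permission class, target name and optional actions, e.g.
    // "access denied (java.lang.RuntimePermission accessClassInPackage.sun.misc)".
    private static final Pattern DENIED =
            Pattern.compile("access denied \\((\\S+) (\\S+)(?: (\\S+))?\\)");

    static Set<String> toPolicyLines(List<String> logLines) {
        Set<String> out = new LinkedHashSet<>(); // keeps first-seen order, drops duplicates
        for (String line : logLines) {
            Matcher m = DENIED.matcher(line);
            if (!m.find()) {
                continue;                        // stack traces, "access allowed", etc.
            }
            StringBuilder sb = new StringBuilder("permission ")
                    .append(m.group(1))
                    .append(" \"").append(m.group(2)).append('"');
            if (m.group(3) != null) {            // actions such as "read"
                sb.append(", \"").append(m.group(3)).append('"');
            }
            out.add(sb.append(';').toString());
        }
        return out;
    }
}
```

Fed the denied line above, the helper produces `permission java.lang.RuntimePermission "accessClassInPackage.sun.misc";`. That line goes into the grant block for the failing codeBase.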

Now the users cannot do dangerous things. If they try to type java.lang.System.exit(1) in the JRuby code, the VM will not exit, instead they will get a security exception:

java.security.AccessControlException: access denied (java.lang.RuntimePermission exitVM.1)

at java.security.AccessControlContext.checkPermission(AccessControlContext.java:323)

at java.security.AccessController.checkPermission(AccessController.java:546)

at java.lang.SecurityManager.checkPermission(SecurityManager.java:532)

The web application is secured now.

Abstract: developerWorks China, Java technology library: Hot swapping of Java classes...

This tutorial explains how to configure your cluster computers so that you can easily start a set of Erlang nodes on every machine through SSH. It shows how to use the slave module to start Erlang nodes that are linked to a main controller.

Configuring SSH servers

Linux distributions generally install and configure the SSH server properly if you ask for SSH server installation. The SSH server is sometimes called sshd, for SSH daemon.

You need to have SSH servers running on all your cluster nodes.

Configuring your SSH client: connection without password

SSH client RSA key authentication

To manage your cluster as a whole, you need to be able to log into the cluster nodes over SSH without being prompted for a password or passphrase. Here are the steps needed to configure your SSH client and server to use an RSA key for authentication. You only need to do this once for each client/server pair.

- Generate an SSH RSA key, if you do not already have one:

- Copy the id_rsa.pub file to the target machine:

scp .ssh/id_rsa.pub userid@ssh2-server:id_rsa.pub

- Connect through SSH on the server:

- Create a .ssh directory in the user home directory (if necessary):

- Copy the contents of the id_rsa.pub file to the authorization file for protocol 2 connections:

cat id_rsa.pub >>$HOME/.ssh/authorized_keys

- Remove the id_rsa.pub file:

Alternatively, if the ssh-copy-id command is available on your computer, you can run ssh-copy-id ssh2-server to replace steps 2 to 6. ssh-copy-id is available, for example, on the Linux Mandrake and Debian distributions.

Adding your identity to the SSH-agent software

After the previous step, you will be prompted for the passphrase of your RSA key each time you initiate a connection. To avoid typing the passphrase many times, you can add your identity to a program called ssh-agent. It keeps your passphrase for the duration of the work session, which simplifies working with SSH:

- Ensure a program called ssh-agent is running. Type:

to check if ssh-agent is running under your userid. Type:

to check that ssh-agent is linked to your current window manager session or shell process.

- If ssh-agent is not started, you can create an ssh-agent session in the shell with, for example, the screen program:

After this command, SSH actions typed into the screen console will be handled through ssh-agent.

- Add your identity to the agent:

Type your passphrase when prompted.

- You can list the identities that have been added to the running ssh-agent:

- You can remove an identity from the ssh-agent with:

Please consult ssh-add manual for more options (identity lifetime, agent locking, ...)

Routing to and from the cluster

When setting up clusters, you can often access only the gateway/load-balancer front computer. To reach the other nodes, you need to route your requests to the cluster nodes through the gateway machine.

To take an example, suppose your gateway to the cluster is 80.65.232.137. The controller machine is a computer outside the cluster, from which the operator controls the cluster's behavior. Your cluster's internal addresses form the network 192.0.0.0. On your client computer, run the command:

route add -net 192.0.0.0 gw 80.65.232.137 netmask 255.255.255.0

This only works if IP forwarding is enabled on the gateway computer.

To ensure proper routing, you can maintain a common /etc/hosts file with entries for all computers in your cluster. In our example, with a seven-computer cluster, the file /etc/hosts could look like this:

10.9.195.12 controler

80.65.232.137 gateway

192.0.0.11 eddieware

192.0.0.21 yaws1

192.0.0.22 yaws2

192.0.0.31 mnesia1

192.0.0.32 mnesia2

You could also add a DNS server, but for relatively small cluster, it is probably easier to manage an /etc/hosts file.

Starting Erlang nodes and setting up the Erlang cluster

Starting a whole Erlang cluster is very easy once you can connect with SSH to all cluster nodes without being prompted for a password.

Starting the Erlang master node

Erlang needs to be started with the -rsh ssh parameter so that the slave module connects to the target nodes with ssh instead of rsh. It also needs to be started with networking enabled, using the -sname node parameter.

Here is an example Erlang command to start the Erlang master node:

erl -rsh ssh -sname clustmaster

Be careful: the slave nodes in the cluster must be able to route back to your master node using its short name. slave:start times out if the slave cannot connect back to your master node.

Starting the slave nodes (cluster)

The custom function cluster:slaves/1 is a wrapper around the Erlang slave module. It makes it easy to start a set of Erlang nodes on target hosts with the same cookie.

-module(cluster).

-export([slaves/1]).

%% Argument:

%% Hosts: List of hostname (string)

slaves([]) ->

ok;

slaves([Host|Hosts]) ->

Args = erl_system_args(),

NodeName = "cluster",

{ok, Node} = slave:start_link(Host, NodeName, Args),

io:format("Erlang node started = [~p]~n", [Node]),

slaves(Hosts).

erl_system_args()->

Shared = case init:get_argument(shared) of

error -> " ";

{ok,[[]]} -> " -shared "

end,

%% Note the space after -setcookie: it separates the flag from the cookie value.

lists:append(["-rsh ssh -setcookie ",

atom_to_list(erlang:get_cookie()),

Shared, " +Mea r10b "]).

%% Do not forget to start erlang with a command like:

%% erl -rsh ssh -sname clustmaster

Here is a sample session:

mremond@controler:~/cvs/cluster$ erl -rsh ssh -sname demo

Erlang (BEAM) emulator version 5.3 [source] [hipe]

Eshell V5.3 (abort with ^G)

(demo@controler)1> cluster:slaves(["gateway", "yaws1", "yaws2", "mnesia1", "mnesia2", "eddieware"]).

Erlang node started = [cluster@gateway]

Erlang node started = [cluster@yaws1]

Erlang node started = [cluster@yaws2]

Erlang node started = [cluster@mnesia1]

Erlang node started = [cluster@mnesia2]

Erlang node started = [cluster@eddieware]

ok

The order of the nodes in the cluster:slaves/1 list parameter does not matter.

You can check the list of known nodes:

(demo@controler)2> nodes().

[cluster@gateway,

cluster@yaws1,

cluster@yaws2,

cluster@mnesia1,

cluster@mnesia2,

cluster@eddieware]

And you can start executing code on cluster nodes:

(demo@controler)3> rpc:multicall(nodes(), io, format, ["Hello world!~n", []]).

Hello world!

Hello world!

Hello world!

Hello world!

Hello world!

Hello world!

{[ok,ok,ok,ok,ok,ok],[]}

If you have trouble starting slaves, you can uncomment the line:

%%io:format("Command: ~s~n", [Cmd])

before the open_port instruction:

open_port({spawn, Cmd}, [stream]),

in the slave:wait_for_slave/7 function.

<?php

header('Content-disposition:attachment;filename=movie.mpg');

header('Content-type:video/mpeg');

readfile('movie.mpg');

?>

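The socket API was originally designed for network communication, but an IPC mechanism was later built on the socket framework: the UNIX Domain Socket. Network sockets can also be used between processes on the same host (through the loopback address 127.0.0.1). UNIX Domain Sockets are more efficient for IPC, however, because:

- data does not pass through the network protocol stack;
- there is no packing and unpacking, checksum computation, or maintenance of sequence numbers and acknowledgements;
- application-layer data is simply copied from one process to another.

The reason is that IPC is inherently reliable communication, whereas network protocols are designed for unreliable communication. Like TCP and UDP, UNIX Domain Sockets provide both stream-oriented and datagram-oriented APIs. Even the message-oriented UNIX Domain Socket is reliable, though: messages are neither lost nor delivered out of order.

UNIX Domain Sockets are full-duplex and have a rich API. This gives them clear advantages over other IPC mechanisms, and they have become one of the most widely used IPC mechanisms. For example, the X Window server and GUI programs communicate through UNIX Domain Sockets.

Using a UNIX Domain Socket is very similar to using a network socket. You first call socket() to create a socket file descriptor, with:

- the address family set to AF_UNIX;
- the type set to either SOCK_DGRAM or SOCK_STREAM;
- the protocol argument still set to 0.

The most obvious difference from network socket programming is the address format, represented by struct sockaddr_un. A network socket address is an IP address plus a port number. A UNIX Domain Socket address is instead the path of a socket-type file in the file system. The bind() call creates this socket file; if the file already exists when bind() is called, bind() returns an error.

The following program binds a UNIX domain socket to an address.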

#include <stdlib.h>

#include <stdio.h>

#include <stddef.h>

#include <sys/socket.h>

#include <sys/un.h>

#include <string.h>

int main(void)

{

int fd, size;

struct sockaddr_un un;

memset(&un, 0, sizeof(un));

un.sun_family = AF_UNIX;

strcpy(un.sun_path, "foo.socket");

if ((fd = socket(AF_UNIX, SOCK_STREAM, 0)) < 0) {

perror("socket error");

exit(1);

}

size = offsetof(struct sockaddr_un, sun_path) + strlen(un.sun_path);

if (bind(fd, (struct sockaddr *)&un, size) < 0) {

perror("bind error");

exit(1);

}

printf("UNIX domain socket bound\n");

exit(0);

}

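Note the offsetof macro used in the program. It is defined in the stddef.h header; a traditional definition looks like this:

#define offsetof(TYPE, MEMBER) ((int)&((TYPE *)0)->MEMBER)

offsetof(struct sockaddr_un, sun_path) yields the offset of the sun_path member within struct sockaddr_un, that is, the byte at which sun_path starts within the struct. Think about it: how does this macro achieve that?

The program's output is as follows.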

$ ./a.out

UNIX domain socket bound

$ ls -l foo.socket

srwxrwxr-x 1 user 0 Aug 22 12:43 foo.socket

$ ./a.out

bind error: Address already in use

$ rm foo.socket

$ ./a.out

UNIX domain socket bound
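For comparison, roughly the same experiment can be done in Java 16 or later, which added UNIX domain socket support to java.nio.channels. Below is a minimal sketch with the hypothetical class name UnixBindDemo. Two differences from the C program are worth noting:

- ServerSocketChannel.bind() also starts listening;
- closing the channel leaves the socket file on disk, so the sketch deletes it explicitly (the equivalent of rm foo.socket).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.BindException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.file.Files;
import java.nio.file.Path;

// Java 16+ counterpart of the bind example (hypothetical class name).
final class UnixBindDemo {

    /** Creates a stream-oriented UNIX domain socket and binds it to path. */
    static ServerSocketChannel bindAt(Path path) throws IOException {
        ServerSocketChannel ch = ServerSocketChannel.open(StandardProtocolFamily.UNIX);
        try {
            ch.bind(UnixDomainSocketAddress.of(path)); // creates the socket file
            return ch;
        } catch (IOException e) {
            ch.close();
            throw e;
        }
    }

    /** Returns true if a second bind to an existing socket file fails, as in the second ./a.out run. */
    static boolean secondBindFails() {
        try {
            Path dir = Files.createTempDirectory("uds");
            Path sock = dir.resolve("foo.socket");
            try (ServerSocketChannel first = bindAt(sock)) {
                try (ServerSocketChannel second = bindAt(sock)) {
                    return false;             // unexpected: the path could be reused
                } catch (BindException e) {
                    return true;              // "Address already in use"
                }
            } finally {
                Files.deleteIfExists(sock);   // closing the channel leaves the file behind
                Files.deleteIfExists(dir);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```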

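Below is the server's listen module. As with network sockets, after bind you call listen. This indicates that a service is offered at the bound address, that is, at the socket file.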

#include <stddef.h>

#include <sys/socket.h>

#include <sys/un.h>

#include <errno.h>

#include <string.h>

#include <unistd.h>

#define QLEN 10

/*

* Create a server endpoint of a connection.

* Returns fd if all OK, <0 on error.

*/

int serv_listen(const char *name)

{

int fd, len, err, rval;

struct sockaddr_un un;

/* create a UNIX domain stream socket */

if ((fd = socket(AF_UNIX, SOCK_STREAM, 0)) < 0)

return(-1);

unlink(name); /* in case it already exists */

/* fill in socket address structure */

memset(&un, 0, sizeof(un));

un.sun_family = AF_UNIX;

strcpy(un.sun_path, name);

len = offsetof(struct sockaddr_un, sun_path) + strlen(name);

/* bind the name to the descriptor */

if (bind(fd, (struct sockaddr *)&un, len) < 0) {

rval = -2;

goto errout;

}

if (listen(fd, QLEN) < 0) { /* tell kernel we're a server */

rval = -3;

goto errout;

}

return(fd);

errout:

err = errno;

close(fd);

errno = err;

return(rval);

}

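Below is the server's accept module. It works as follows:

- the client address obtained from accept should also be a socket file, and an error code is returned if it is not;
- if it is a socket file, the file is of no further use once the connection is established, so it is removed with unlink;
- the client program's user ID is returned through the output parameter uidptr.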

#include <stddef.h>
#include <unistd.h>
#include <sys/stat.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <errno.h>

int serv_accept(int listenfd, uid_t *uidptr)
{
    int clifd, err, rval;
    socklen_t len;   /* accept() expects a socklen_t *, not an int * */
    struct sockaddr_un un;
    struct stat statbuf;

    len = sizeof(un);
    if ((clifd = accept(listenfd, (struct sockaddr *)&un, &len)) < 0)
        return(-1);   /* often errno=EINTR, if signal caught */

    /* obtain the client's uid from its calling address */
    len -= offsetof(struct sockaddr_un, sun_path);   /* len of pathname */
    if (len >= sizeof(un.sun_path))
        len = sizeof(un.sun_path) - 1;   /* keep the terminator in bounds */
    un.sun_path[len] = 0;   /* null terminate */
    if (stat(un.sun_path, &statbuf) < 0) {
        rval = -2;
        goto errout;
    }
    if (S_ISSOCK(statbuf.st_mode) == 0) {
        rval = -3;   /* not a socket */
        goto errout;
    }
    if (uidptr != NULL)
        *uidptr = statbuf.st_uid;   /* return uid of caller */
    unlink(un.sun_path);   /* we're done with pathname now */
    return(clifd);

errout:
    err = errno;
    close(clifd);
    errno = err;
    return(rval);
}

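Below is the client's connect module. Unlike a typical network socket client, the UNIX domain socket client here explicitly calls bind instead of relying on an address assigned by the system. Binding to a socket file name of the client's own choosing has an advantage: the name can contain the client's pid, so the server can tell clients apart. It is also what allows serv_accept above to stat the client's socket file.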

#include <stdio.h>
#include <stddef.h>
#include <string.h>
#include <unistd.h>
#include <sys/stat.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <errno.h>

#define CLI_PATH "/var/tmp/"   /* +5 for pid = 14 chars */

/*
 * Create a client endpoint and connect to a server.
 * Returns fd if all OK, <0 on error.
 */
int cli_conn(const char *name)
{
    int fd, len, err, rval;
    struct sockaddr_un un;

    /* create a UNIX domain stream socket */
    if ((fd = socket(AF_UNIX, SOCK_STREAM, 0)) < 0)
        return(-1);

    /* fill socket address structure with our address */
    memset(&un, 0, sizeof(un));
    un.sun_family = AF_UNIX;
    sprintf(un.sun_path, "%s%05d", CLI_PATH, (int)getpid());
    len = offsetof(struct sockaddr_un, sun_path) + strlen(un.sun_path);
    unlink(un.sun_path);   /* in case it already exists */
    if (bind(fd, (struct sockaddr *)&un, len) < 0) {
        rval = -2;
        goto errout;
    }

    /* fill socket address structure with server's address */
    memset(&un, 0, sizeof(un));
    un.sun_family = AF_UNIX;
    strcpy(un.sun_path, name);
    len = offsetof(struct sockaddr_un, sun_path) + strlen(name);
    if (connect(fd, (struct sockaddr *)&un, len) < 0) {
        rval = -4;
        goto errout;
    }
    return(fd);

errout:
    err = errno;
    close(fd);
    errno = err;
    return(rval);
}

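We have introduced the two main things nginx, a lightweight, high-performance server, can do:

>Serve directly as an HTTP server (replacing Apache; PHP requires a FastCGI processor, which we will cover later);

>Act as a reverse proxy server that performs load balancing (below we show how nginx is used for load balancing in practice). Because of nginx's strength in handling concurrency, this use is now very common. Apache's mod_proxy combined with mod_cache can also reverse-proxy and load-balance across multiple app servers, but Apache is still not as good as nginx at handling concurrency.

An example of nginx as a reverse proxy for load balancing: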

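1) Environment:

a. Our local machine runs Windows, and we install a Linux virtual machine with VirtualBox.

On the local Windows system, install nginx (listening on port 8080) and Apache (listening on port 80). On the Linux virtual machine, install Apache (listening on port 80).

This gives us one nginx at the front acting as the reverse proxy server, with two Apache servers behind it as application servers (a small server cluster, so to speak ;-) );

b. nginx serves as the reverse proxy, placed in front of the two Apache servers as the entry point for users;

nginx handles only static pages; all dynamic pages (PHP requests) are handed to the two back-end Apache servers.

In other words, the site's static pages and files can be placed in nginx's directory, while dynamic pages and database access stay on the back-end Apache servers.

c. Below are two ways to load-balance the server cluster.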

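Assume the front-end nginx (at 127.0.0.1:8080) contains only one static page, index.html;

The two back-end Apache servers are localhost:80 and 158.37.70.143:80. The first has a phpMyAdmin folder and a test.php (test code: print "server1";) in its document root; the other has only a test.php (test code: print "server2";).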

2) Load balancing by request type:

a. For the simplest reverse proxy setup (nginx serves only static content and hands dynamic content to the back-end Apache server), modify nginx.conf as follows:

location ~ \.php$ {
    proxy_pass http://158.37.70.143:80;
}

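>Now, when a client requests localhost:8080/index.html, the front-end nginx responds directly;

>When a user requests localhost:8080/test.php, the file does not exist in nginx's directory at all. The setting location ~ \.php$ above is a regular expression that matches URIs ending in .php; see how location is defined and matched at http://wiki.nginx.org/NginxHttpCoreModule. Because of it, nginx passes the request to the Apache server at 158.37.70.143. That server interprets test.php and returns the resulting HTML to nginx, which sends it to the client; caching is also possible if nginx uses the memcached module or squid. The output is "server2".

That is the simplest example of using nginx as a reverse proxy server;

b. Now let us extend the example so that it uses both servers.

Modify the server block of nginx.conf as follows: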

location ^~ /phpMyAdmin/ {
    proxy_pass http://127.0.0.1:80;
}

location ~ \.php$ {
    proxy_pass http://158.37.70.143:80;
}

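The first block, location ^~ /phpMyAdmin/, is a plain prefix match rather than a regular expression. The ^~ modifier tells nginx to skip the regular-expression locations whenever this prefix is the best match. So if the requested URL starts with http://localhost:8080/phpMyAdmin/, nginx passes the request to the Apache server at 127.0.0.1:80, even though there is no phpMyAdmin directory under the local nginx at all. That server processes the pages under phpMyAdmin and sends the result back to nginx, which returns it to the client. Without ^~, a request such as /phpMyAdmin/index.php would also match \.php$, and the regex location would take precedence;

If the client requests http://localhost:8080/test.php, the request is passed to the Apache at 158.37.70.143:80.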

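In summary, we have achieved load balancing by request type:

>If the user requests the static page index.html, the front-end nginx responds directly;

>If the user requests test.php, the Apache at 158.37.70.143:80 responds;

>If the user requests a page under the phpMyAdmin directory, the Apache at 127.0.0.1:80 responds.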
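The matching rules behind this table can be modelled in a few lines of C. This is a simplified, hypothetical model of just the two location blocks above, not nginx's code. POSIX regex.h stands in for the PCRE library nginx uses, pick_backend is our own name, and the "static" fallback assumes a default location / block like the one in the stock nginx.conf.

```c
#include <regex.h>
#include <string.h>

/* Simplified model: nginx finds the longest matching prefix location; if it
   is marked ^~, regex locations are skipped. Otherwise regex locations are
   tried in order and the first match wins; if none matches, the prefix
   location (here, nginx's own static handling) is used. */
const char *pick_backend(const char *uri)
{
    regex_t php;
    const char *target = "nginx (static file)";

    /* location ^~ /phpMyAdmin/ : prefix match that suppresses regexes */
    if (strncmp(uri, "/phpMyAdmin/", strlen("/phpMyAdmin/")) == 0)
        return "http://127.0.0.1:80";

    /* location ~ \.php$ : URI ending in .php */
    if (regcomp(&php, "\\.php$", REG_EXTENDED | REG_NOSUB) != 0)
        return NULL;
    if (regexec(&php, uri, 0, NULL, 0) == 0)
        target = "http://158.37.70.143:80";
    regfree(&php);
    return target;
}
```

pick_backend("/phpMyAdmin/index.php") returns the 127.0.0.1 backend even though the URI also ends in .php. That is the effect of ^~.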

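3) Load balancing for the same page:

That is, when users request the same page, http://localhost:8080/test.php, the load is balanced across two servers. In practice the data on the two servers must be kept synchronized; here they print server1 and server2 only so we can tell them apart.

a. Our current setup: on Windows, nginx runs on localhost, listening on port 8080;

there are two Apache servers: one at 127.0.0.1:80 (its test.php prints server1) and one in the virtual machine at 158.37.70.143:80 (its test.php prints server2).

b. Reconfigure nginx.conf as follows:

>First, in the http block of nginx.conf, add the definition of the server cluster (two servers in our case):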

upstream myCluster {
    server 127.0.0.1:80;
    server 158.37.70.143:80;
}

This defines a server cluster containing two servers.

>Then define the load balancing in the server block:

location ~ \.php$ {
    proxy_pass http://myCluster;   # must match the upstream name defined above
    proxy_redirect off;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
}

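Now, when http://localhost:8080/test.php is requested, the file does not exist in nginx's directory. nginx therefore passes the request to the server cluster myCluster, where it is handled in turn (round robin) by either 127.0.0.1:80 or 158.37.70.143:80.

No weight is given after either server in the upstream definition above, so the two share the load equally. If you want one server to take more of the requests, for example: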

upstream myCluster {
    server 127.0.0.1:80 weight=5;
    server 158.37.70.143:80;
}

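This sends 5/6 of the requests to the first server and 1/6 to the second. The default weight is 1, so the shares are 5/(5+1) and 1/(5+1). Parameters such as max_fails and fail_timeout can also be set.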
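How does weight=5 turn into exactly 5/6? nginx's default round-robin balancer uses a "smooth weighted round-robin" scheme. The sketch below is a simplified C version of that idea, not nginx's source. It leaves out failure handling, max_fails and fail_timeout, and struct peer and pick_peer are our own names.

```c
#include <stddef.h>

/* Hypothetical per-server state for this sketch. */
struct peer {
    int weight;          /* configured weight (weight=5 above; default 1) */
    int current_weight;  /* running score, starts at 0 */
};

/* Each pick adds every peer's weight to its score, chooses the highest score
   (the first one wins ties), then subtracts the total weight from the winner.
   Starting from all-zero scores, every run of sum(weights) picks chooses
   peer i exactly weight_i times. Returns the chosen index. */
size_t pick_peer(struct peer *peers, size_t n)
{
    size_t i, best = 0;
    int total = 0;

    for (i = 0; i < n; i++) {
        peers[i].current_weight += peers[i].weight;
        total += peers[i].weight;
        if (peers[i].current_weight > peers[best].current_weight)
            best = i;
    }
    peers[best].current_weight -= total;
    return best;
}
```

With weights 5 and 1, six picks come out as first, first, first, second, first, first. The lighter server is interleaved rather than served in bursts. With equal weights, as in the first upstream block, the same code simply alternates.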

http://wiki.nginx.org/NginxHttpUpstreamModule

====================

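In summary, we use nginx's reverse proxy feature and place nginx in front of multiple Apache servers.

nginx only serves static pages and proxies dynamic requests. The back-end Apache servers act as app servers: they process the dynamic requests passed from the front end and return the results to nginx.

With this architecture, nginx and multiple Apache servers form a load-balanced cluster.

Two kinds of balancing:

1) nginx can proxy requests for different content to different back-end servers. In the example above, requests for the phpMyAdmin directory go to the first server and requests for test.php go to the second;

2) nginx can spread requests for the same page evenly across different back-end servers; if the servers differ in capacity, weights can be set. In the example above, requests for test.php are spread across server1 and server2.

In real deployments, server1 and server2 hold the same application code and data, so data synchronization between them must be taken into account.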

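I read an introduction to Heroku on InfoQ a long time ago, when the service had not yet launched. While tidying up my bookmarks today, I found that Heroku has been live for some time and is growing quickly as a cloud computing platform.

In Heroku's own description: Heroku is the easiest platform for deploying Rails applications. You simply put your code in, then start it and run it; nobody can fail at that. Heroku takes care of everything, from version control to automatic scaling (built on top of Amazon EC2). We provide a complete set of tools for developing and managing applications, whether through the web interface or the new extension API.

Heroku's architecture is built mostly on open-source software. :) In fact, when it comes to building a cloud computing platform, hasn't the open-source world already solved everything? Here is Heroku's architecture diagram, which is very elegant: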

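1. Reverse proxy server: Nginx

Nginx is an open-source, high-performance web server and reverse proxy server that also proxies IMAP/POP3. Rather than using multiple threads to handle high concurrency, Nginx uses a scalable event-driven (asynchronous) network model, which addresses the well-known C10K problem.

Here Nginx handles HTTP-level concerns, including SSL processing, HTTP request forwarding and gzip compression of responses. Several front-end Nginx servers are used to deal with DNS and load balancing.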
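The phrase "event-driven instead of one thread per connection" can be illustrated with a rough C sketch built on poll(). This is not nginx's code; nginx uses more scalable mechanisms such as epoll on Linux and kqueue on BSD. serve_ready is a hypothetical name, and the "service" is a toy.

```c
#include <ctype.h>
#include <poll.h>
#include <stddef.h>
#include <unistd.h>

#define MAX_CONNS 64

/* One pass of a single-threaded event loop. Connection i reads requests from
   in[i] and writes replies to out[i] (for a socket, pass the same descriptor
   in both arrays). poll() waits until at least one input is readable; every
   ready connection is then served in turn, and idle ones cost nothing.
   Toy service: reply with the request upper-cased.
   Returns the number of connections served, 0 on timeout, -1 on error. */
int serve_ready(const int *in, const int *out, size_t n, int timeout_ms)
{
    struct pollfd pfd[MAX_CONNS];
    size_t i;
    int served = 0;

    if (n > MAX_CONNS)
        return -1;
    for (i = 0; i < n; i++) {
        pfd[i].fd = in[i];
        pfd[i].events = POLLIN;
        pfd[i].revents = 0;
    }
    if (poll(pfd, (nfds_t)n, timeout_ms) < 0)
        return -1;
    for (i = 0; i < n; i++) {
        char buf[256];
        ssize_t k, j;

        if (!(pfd[i].revents & POLLIN))
            continue;                   /* nothing to do for this connection */
        if ((k = read(in[i], buf, sizeof(buf))) <= 0)
            continue;                   /* EOF/error: a real server would close */
        for (j = 0; j < k; j++)
            buf[j] = (char)toupper((unsigned char)buf[j]);
        if (write(out[i], buf, (size_t)k) == k)
            served++;
    }
    return served;
}
```

A real server runs such a pass in an endless loop and also watches for writability and new connections. The point is that a single thread serves whichever connections are ready, instead of dedicating a blocked thread to each one.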

2. HTTP cache: Varnish

Varnish is a state-of-the-art, high-performance HTTP accelerator. It uses the advanced features in Linux 2.6, FreeBSD 6/7 and Solaris 10 to achieve its high performance.

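Here Varnish mainly serves static resources, including statically rendered pages, images, CSS and so on. Requests it cannot satisfy are fetched through the next layer, the Routing Mesh. The usual alternative is Squid, but in recent years Varnish has been adopted more widely by large websites.

3. Dynamic routing layer. It is implemented in Erlang by the Heroku team itself. Erlang makes it possible to build highly reliable, stable server software, and we could use it the same way ourselves. This layer solves the routing problem. It distributes incoming dynamic requests, tracks how much load each back end is carrying, and assigns requests to the available app services in the next layer. By choosing routes according to the load capacity of the layer below, it gives the business apps scalability and fault tolerance. In principle it is a distributed pool for routing dynamic HTTP requests.

4. Dynamic grid layer (Heroku's "dyno grid"). Users' apps are deployed in this layer, which can be seen as a server cluster with much finer granularity.

5. Database layer. Nothing more needs to be said here.

6. Memory Cache

Again, little needs to be said: most Internet companies use it now, and many good client connectors have been developed for it. My company uses it too, although we also have our own distributed in-memory system, originally TTC Server and now apparently called WorkBench.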

|