Binary search algorithm

Generally, to find a value in unsorted array, we should look through elements of an array one by one, until searched value is found. In case of searched value is absent from array, we go through all elements. In average, complexity of such an algorithm is proportional to the length of the array.

Situation changes significantly, when array is sorted. If we know it, random access capability can be utilized very efficientlyto find searched value quick. Cost of searching algorithm reduces to binary logarithm of the array length. For reference, log2(1 000 000) ‚â?20. It means, that in worst case, algorithm makes 20 steps to find a value in sorted array of a million elements or to say, that it doesn't present it the array.

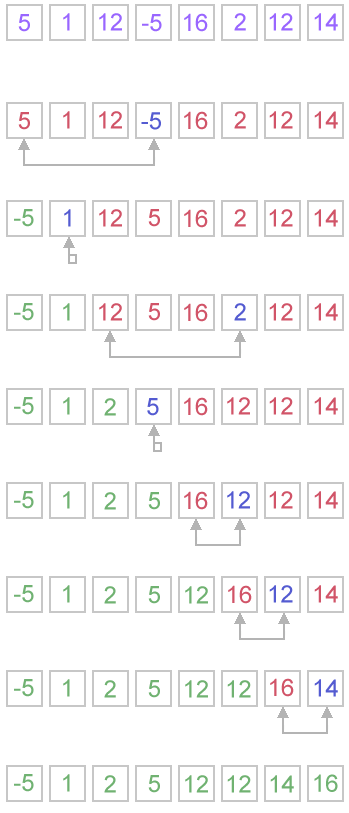

Algorithm

Algorithm is quite simple. It can be done either recursively or iteratively:

- get the middle element;

- if the middle element equals to the searched value, the algorithm stops;

- otherwise, two cases are possible:

- searched value is less, than the middle element. In this case, go to the step 1 for the part of the array, before middle element.

- searched value is greater, than the middle element. In this case, go to the step 1 for the part of the array, after middle element.

Examples

Example 1. Find 6 in {-1, 5, 6, 18, 19, 25, 46, 78, 102, 114}.

Step 1 (middle element is 19 > 6): -1 5 6 18 19 25 46 78 102 114

Step 2 (middle element is 5 < 6): -1 5 6 18 19 25 46 78 102 114

Step 3 (middle element is 6 == 6): -1 5 6 18 19 25 46 78 102 114

Example 2. Find 103 in {-1, 5, 6, 18, 19, 25, 46, 78, 102, 114}.

Step 1 (middle element is 19 < 103): -1 5 6 18 19 25 46 78 102 114

Step 2 (middle element is 78 < 103): -1 5 6 18 19 25 46 78 102 114

Step 3 (middle element is 102 < 103): -1 5 6 18 19 25 46 78 102 114

Step 4 (middle element is 114 > 103): -1 5 6 18 19 25 46 78 102 114

Step 5 (searched value is absent): -1 5 6 18 19 25 46 78 102 114

Complexity analysis

Huge advantage of this algorithm is that it's complexity depends on the array size logarithmically in worst case. In practice it means, that algorithm will do at most log2(n) iterations, which is a very small number even for big arrays. It can be proved very easily. Indeed, on every step the size of the searched part is reduced by half. Algorithm stops, when there are no elements to search in. Therefore, solving following inequality in whole numbers:

n / 2iterations > 0

resulting in

iterations <= log2(n).

It means, that binary search algorithm time complexity is O(log2(n)).

Code snippets.

You can see recursive solution for Java and iterative for python below.Java

int binarySearch(int[] array, int value, int left, int right) {

if (left > right)

return -1;

int middle = left + (right-left) / 2;

if (array[middle] == value)

return middle;

if (array[middle] > value)

return binarySearch(array, value, left, middle - 1);

else

return binarySearch(array, value, middle + 1, right);

}

Python

def biSearch(L,e,first,last):

if last - first < 2: return L[first] == e or L[last] == e

mid = first + (last-first)/2

if L[mid] ==e: return True

if L[mid]> e :

return biSearch(L,e,first,mid-1)

return biSearch(L,e,mid+1,last)