I stopped a Hadoop program partway through its run. Afterwards, adding files to or deleting files from HDFS failed with the error "Name node is in safe mode":
rmr: org.apache.hadoop.dfs.SafeModeException: Cannot delete /user/hadoop/input. Name node is in safe mode

The command that fixes it:

bin/hadoop dfsadmin -safemode leave   # turn off safe mode

Reposted from: http://shutiao2008.iteye.com/blog/318950

Notes on safe mode (safemode)

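When the NameNode starts, it first enters safe mode. If the fraction of blocks missing from the DataNodes exceeds a certain proportion (1 - dfs.safemode.threshold.pct), the system stays in safe mode, which is read-only.
dfs.safemode.threshold.pct (default 0.999f) means that when HDFS starts, it may leave safe mode only once the number of blocks reported by the DataNodes reaches 0.999 times the number of blocks recorded in the metadata; until then it stays read-only. If the value is set above 1, HDFS stays in safe mode forever.
The following line is taken from the NameNode startup log (the reported-block ratio of 1 has reached the threshold 0.9990):
The ratio of reported blocks 1.0000 has reached the threshold 0.9990. Safe mode will be turned off automatically in 18 seconds.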

hadoop dfsadmin -safemode leave

There are two ways to leave safe mode:

1. Lower dfs.safemode.threshold.pct to a smaller value (the default is 0.999).
2. Force the NameNode out of safe mode with hadoop dfsadmin -safemode leave.

http://bbs.hadoopor.com/viewthread.php?tid=61&extra=page%3D1

--------------------------------------------
Safe mode is exited when the minimal replication condition is reached, plus an extension time of 30 seconds. The minimal replication condition is when 99.9% of the blocks in the whole filesystem meet their minimum replication level (which defaults to one, and is set by dfs.replication.min).

Precondition for leaving safe mode: 99.9% of the blocks in the whole filesystem (99.9% by default, configurable via dfs.safemode.threshold.pct) have reached the minimum replication level (1 by default, configurable via dfs.replication.min).
dfs.safemode.threshold.pct float 0.999

The proportion of blocks in the system that must meet the minimum replication level defined by dfs.replication.min before the namenode will exit safe mode. Setting this value to 0 or less forces the name-node not to start in safe mode. Setting this value to more than 1 means the namenode never exits safe mode.
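The exit rule described above can be modeled in a few lines. This is a minimal sketch, not NameNode code: `SafeModeModel` and its methods are hypothetical names, and it treats the threshold as a plain ratio test followed by the extension period (30 seconds by default, per the passage quoted above).

```java
import java.time.Duration;

/** Hypothetical model of the safe-mode exit rule; not Hadoop code. */
final class SafeModeModel {
    private final double thresholdPct; // plays the role of dfs.safemode.threshold.pct
    private final Duration extension;  // wait after the threshold is reached (30 s by default)

    SafeModeModel(double thresholdPct, Duration extension) {
        this.thresholdPct = thresholdPct;
        this.extension = extension;
    }

    /** A threshold of 0 or less means the NameNode does not start in safe mode. */
    boolean startsInSafeMode() {
        return thresholdPct > 0;
    }

    /** True when the fraction of blocks meeting dfs.replication.min reaches the threshold. */
    boolean thresholdReached(long safeBlocks, long totalBlocks) {
        if (thresholdPct > 1) {
            return false; // a ratio can never exceed 1, so safe mode is never left
        }
        if (totalBlocks == 0) {
            return true; // empty filesystem: nothing to wait for
        }
        return (double) safeBlocks / totalBlocks >= thresholdPct;
    }

    /** Safe mode ends once the threshold holds and the extension period has elapsed. */
    boolean canLeave(long safeBlocks, long totalBlocks, Duration sinceThresholdReached) {
        return thresholdReached(safeBlocks, totalBlocks)
                && sinceThresholdReached.compareTo(extension) >= 0;
    }
}
```

With the default 0.999, a log line such as "The ratio of reported blocks 0.5000 has not reached the threshold 0.9990" corresponds to `thresholdReached(1, 2)` returning false.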

--------------------------------------------
You can control safe mode with dfsadmin -safemode value, where value is one of:

enter - enter safe mode

leave - force the NameNode to leave safe mode

get - report whether safe mode is on

wait - wait until safe mode has ended


The procedure is as follows:

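1. Download Hadoop 1.0.3 (http://hadoop.apache.org/releases.html#Download) and extract it to a directory of your choice (preferably a path containing only English characters; a path with Chinese characters caused problems when I tried it).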

2. Import the ..\hadoop-1.0.3\src\contrib\eclipse-plugin project into Eclipse; by default the project is named MapReduceTools.

3. Create a lib directory in the MapReduceTools project and copy into it hadoop-core (obtained by renaming the hadoop-*.jar in the Hadoop root directory), commons-cli-1.2.jar, commons-lang-2.4.jar, commons-configuration-1.6.jar, jackson-mapper-asl-1.8.8.jar, jackson-core-asl-1.8.8.jar and commons-httpclient-3.0.1.jar.

4. Edit build-contrib.xml in the parent directory:

Find <property name="hadoop.root" location="${root}/../../../"/>, change location to the directory where Hadoop 1.0.3 was actually extracted, and add the following below it:

<property name="eclipse.home" location="D:/Program Files/eclipse"/>

<property name="version" value="1.0.3"/>

5. Edit build.xml in the project directory:

<target name="jar" depends="compile" unless="skip.contrib">

<mkdir dir="${build.dir}/lib"/>

<copy file="${hadoop.root}/hadoop-core-${version}.jar" tofile="${build.dir}/lib/hadoop-core.jar" verbose="true"/>

<copy file="${hadoop.root}/lib/commons-cli-1.2.jar" todir="${build.dir}/lib" verbose="true"/>

<copy file="${hadoop.root}/lib/commons-lang-2.4.jar" todir="${build.dir}/lib" verbose="true"/>

<copy file="${hadoop.root}/lib/commons-configuration-1.6.jar" todir="${build.dir}/lib" verbose="true"/>

<copy file="${hadoop.root}/lib/jackson-mapper-asl-1.8.8.jar" todir="${build.dir}/lib" verbose="true"/>

<copy file="${hadoop.root}/lib/jackson-core-asl-1.8.8.jar" todir="${build.dir}/lib" verbose="true"/>

<copy file="${hadoop.root}/lib/commons-httpclient-3.0.1.jar" todir="${build.dir}/lib" verbose="true"/>

<jar

jarfile="${build.dir}/hadoop-${name}-${version}.jar"

manifest="${root}/META-INF/MANIFEST.MF">

<fileset dir="${build.dir}" includes="classes/ lib/"/>

<fileset dir="${root}" includes="resources/ plugin.xml"/>

</jar>

</target>

6. In Eclipse, right-click build.xml and choose Run As > Ant Build.

If you get the error "package org.apache.hadoop.fs does not exist", edit build.xml as follows:

<path id="hadoop-jars">

<fileset dir="${hadoop.root}/">

<include name="hadoop-*.jar"/>

</fileset>

</path>

and add <path refid="hadoop-jars"/> inside <path id="classpath">.

7. Wait for Ant to finish compiling. The result is hadoop-eclipse-plugin-1.0.3.jar in \build\contrib.

8. Check that the attributes in META-INF/MANIFEST.MF inside the built jar are complete; if they are not, fill them in:

,lib/commons-lang-2.4.jar,lib/commons-configuration-1.6.jar,lib/jackson-mapper-asl-1.8.8.jar,lib/jackson-core-asl-1.8.8.jar,lib/commons-httpclient-3.0.1.jar

9. Put the jar into eclipse/plugins, restart Eclipse, and check that the plugin was installed successfully.
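Step 8 can also be checked mechanically. The sketch below assumes the entries listed in step 8 belong to the plugin's OSGi `Bundle-ClassPath` header and uses the standard `java.util.jar.Manifest` parser, which also joins the wrapped continuation lines of a real manifest; `ManifestCheck` and `missingEntries` are hypothetical names.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.jar.Manifest;

/** Hypothetical helper: lists required jars missing from the Bundle-ClassPath header. */
final class ManifestCheck {
    static List<String> missingEntries(InputStream manifestStream, List<String> required) {
        Manifest mf;
        try {
            mf = new Manifest(manifestStream); // joins continuation lines (leading space)
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String cp = mf.getMainAttributes().getValue("Bundle-ClassPath");
        Set<String> present = new HashSet<>();
        if (cp != null) {
            for (String entry : cp.split(",")) {
                present.add(entry.trim());
            }
        }
        List<String> missing = new ArrayList<>();
        for (String jar : required) {
            if (!present.contains(jar)) {
                missing.add(jar);
            }
        }
        return missing;
    }
}
```

Opening the built jar with `java.util.jar.JarFile` and passing the stream of its `META-INF/MANIFEST.MF` entry, together with the lib/ jars from step 3, would report anything still missing.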


First we create a table in HBase to store the data:

    public static void creatTable(String table) throws IOException {
        HConnection conn = HConnectionManager.getConnection(conf);
        HBaseAdmin admin = new HBaseAdmin(conf);
        if (!admin.tableExists(new Text(table))) {
            System.out.println("1. " + table + " table creating ... please wait");
            HTableDescriptor tableDesc = new HTableDescriptor(table);
            tableDesc.addFamily(new HColumnDescriptor("http:"));
            tableDesc.addFamily(new HColumnDescriptor("url:"));
            tableDesc.addFamily(new HColumnDescriptor("referrer:"));
            admin.createTable(tableDesc);
        } else {
            System.out.println("1. " + table + " table already exists.");
        }
        System.out.println("2. access_log files fetching using map/reduce");
    }

Next we run a MapReduce job to extract each line of the log. Because we only need to extract the data and do not need to reduce the results, a Map program alone is enough:

    public static class MapClass extends MapReduceBase implements
            Mapper<WritableComparable, Text, Text, Writable> {

        @Override
        public void configure(JobConf job) {
            tableName = job.get(TABLE, "");
        }

        public void map(WritableComparable key, Text value,
                OutputCollector<Text, Writable> output, Reporter reporter)
                throws IOException {
            try {
                AccessLogParser log = new AccessLogParser(value.toString());
                if (table == null)
                    table = new HTable(conf, new Text(tableName));
                long lockId = table.startUpdate(new Text(log.getIp()));
                table.put(lockId, new Text("http:protocol"), log.getProtocol().getBytes());
                table.put(lockId, new Text("http:method"), log.getMethod().getBytes());
                table.put(lockId, new Text("http:code"), log.getCode().getBytes());
                table.put(lockId, new Text("http:bytesize"), log.getByteSize().getBytes());
                table.put(lockId, new Text("http:agent"), log.getAgent().getBytes());
                table.put(lockId, new Text("url:" + log.getUrl()), log.getReferrer().getBytes());
                table.put(lockId, new Text("referrer:" + log.getReferrer()), log.getUrl().getBytes());

                table.commit(lockId, log.getTimestamp());
            } catch (ParseException e) {
                e.printStackTrace();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
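AccessLogParser itself is not listed here. As a hedged illustration, a minimal stand-in that applies the Apache combined-log-format regex and exposes a few of the fields the mapper uses might look like this. `CombinedLogLine` and its fields are hypothetical; the `]` inside the bracketed time is escaped explicitly, and the timestamp is parsed with `java.time`, which may differ from what the original class did.

```java
import java.time.ZonedDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical stand-in for AccessLogParser (Apache combined log format). */
final class CombinedLogLine {
    // Groups: 1 ip, 2 ident, 3 user, 4 time, 5 request, 6 status, 7 bytes, 8 referrer, 9 agent
    private static final Pattern LINE = Pattern.compile(
            "([^ ]*) ([^ ]*) ([^ ]*) \\[([^\\]]*)\\] \"([^\"]*)\" ([^ ]*) ([^ ]*) \"([^\"]*)\" \"([^\"]*)\".*");
    // Apache time format, e.g. 10/Oct/2000:13:55:36 -0700
    private static final DateTimeFormatter TIME =
            DateTimeFormatter.ofPattern("dd/MMM/yyyy:HH:mm:ss Z", Locale.ENGLISH);

    final String ip, time, request, code, byteSize, referrer, agent;

    CombinedLogLine(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.matches()) {
            throw new IllegalArgumentException("not a combined log line: " + line);
        }
        ip = m.group(1);
        time = m.group(4);
        request = m.group(5);
        code = m.group(6);
        byteSize = m.group(7);
        referrer = m.group(8);
        agent = m.group(9);
    }

    /** Splits the request line ("GET /path HTTP/1.0") into method, URL and protocol. */
    String[] requestParts() {
        return request.split(" ", 3);
    }

    /** Request time as epoch milliseconds (usable as an HBase cell timestamp). */
    long timestampMillis() {
        return ZonedDateTime.parse(time, TIME).toInstant().toEpochMilli();
    }
}
```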

In the Map program, each incoming line is first handed to AccessLogParser, whose constructor matches the log line against the regular expression "([^ ]*) ([^ ]*) ([^ ]*) \\[([^]]*)\\] \"([^\"]*)\" ([^ ]*) ([^ ]*) \"([^\"]*)\" \"([^\"]*)\".*". We then write the fields that AccessLogParser extracts into the HBase table. That completes the program. To launch the MapReduce job, we need to configure it:

    public static void runMapReduce(String table, String dir) throws IOException {
        Path tempDir = new Path("log/temp");
        Path InputDir = new Path(dir);
        FileSystem fs = FileSystem.get(conf);
        JobConf jobConf = new JobConf(conf, LogFetcher.class);
        jobConf.setJobName("apache log fetcher");
        jobConf.set(TABLE, table);
        Path[] in = fs.listPaths(InputDir);
        if (fs.isFile(InputDir)) {
            jobConf.setInputPath(InputDir);
        } else {
            for (int i = 0; i < in.length; i++) {
                if (fs.isFile(in[i])) {
                    jobConf.addInputPath(in[i]);
                } else {
                    Path[] sub = fs.listPaths(in[i]);
                    for (int j = 0; j < sub.length; j++) {
                        if (fs.isFile(sub[j])) {
                            jobConf.addInputPath(sub[j]);
                        }
                    }
                }
            }
        }
        jobConf.setOutputPath(tempDir);
        jobConf.setMapperClass(MapClass.class);

        JobClient client = new JobClient(jobConf);
        ClusterStatus cluster = client.getClusterStatus();
        jobConf.setNumMapTasks(cluster.getMapTasks());
        jobConf.setNumReduceTasks(0);

        JobClient.runJob(jobConf);
        fs.delete(tempDir);
        fs.close();
    }

In the code above we first create a JobConf object, then set the input and output paths, tell MapReduce which Map class to use, set how many map and reduce tasks to run, and finally submit the job to JobClient. More detailed MapReduce documentation is available on the Hadoop Wiki.
Download: the source code and prebuilt jar file, example-src.tgz
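Note that the nested loop in runMapReduce only looks one directory level deep: files directly under the input directory and files in its immediate subdirectories are added, anything deeper is ignored. The same traversal is sketched below against the local file system with `java.nio.file`, purely to make that behavior concrete; it is not Hadoop's FileSystem API, and `InputCollector` is a hypothetical name.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

/** Hypothetical local mirror of the input-path logic in runMapReduce. */
final class InputCollector {
    /** A file is used as-is; a directory contributes its files and its subdirectories' files. */
    static List<Path> collect(Path input) {
        List<Path> result = new ArrayList<>();
        if (Files.isRegularFile(input)) {
            result.add(input);
            return result;
        }
        for (Path child : list(input)) {
            if (Files.isRegularFile(child)) {
                result.add(child);
            } else if (Files.isDirectory(child)) {
                for (Path grandChild : list(child)) {
                    if (Files.isRegularFile(grandChild)) {
                        result.add(grandChild); // deeper directories are skipped
                    }
                }
            }
        }
        return result;
    }

    private static List<Path> list(Path dir) {
        try (Stream<Path> entries = Files.list(dir)) {
            List<Path> out = new ArrayList<>();
            entries.sorted().forEach(out::add);
            return out;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

If deeper nesting matters, a recursive walk (for example `Files.walk`) would be the usual alternative.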

The example is run with:

bin/hadoop jar examples.jar logfetcher <access_log file or directory> <table_name>

How do you run the application above? Assume the directory where the Hadoop distribution was extracted is %HADOOP%.

Copy the files under %HADOOP%\contrib\hbase\bin to %HADOOP%\bin, the files under %HADOOP%\contrib\hbase\conf to %HADOOP%\conf, the files under %HADOOP%\src\contrib\hbase\lib to %HADOOP%\lib, and %HADOOP%\src\contrib\hbase\hadoop-*-hbase.jar to %HADOOP%\lib. Then edit the configuration file hbase-site.xml and set hbase.master, for example to 192.168.2.92:60000. Distribute these files to the machines that run Hadoop and add the addresses of those machines to the regionservers file. Start HBase with bin/start-hbase.sh, copy your Apache log files into the apache-log directory on HDFS, and once startup has finished, run the following command:

bin/hadoop jar examples.jar logfetcher apache-log apache

Visit http://localhost:50030/ to see how your MapReduce job is running, and http://localhost:60010/ to see the state of HBase.

When the MapReduce job has finished, open http://localhost:60010/hql.jsp and enter SELECT * FROM apache limit=50; in the Query box. You will see the data that has been inserted into the table.


Plugin

Hadoop 1.0.2/src/contrib/eclipse-plugin contains only the plugin's source code, so here is a matching Eclipse plugin that I packaged:
Download link

After downloading, drop it into the eclipse/dropins directory (eclipse/plugins also works, but dropins is simpler and recommended) and restart Eclipse. The Map/Reduce perspective is then available.

Configuration

Click the blue elephant icon to create a new Hadoop location:

Note: fill in every field correctly, especially any ports you have changed and the default user name that jobs run as.

For the exact settings, see:

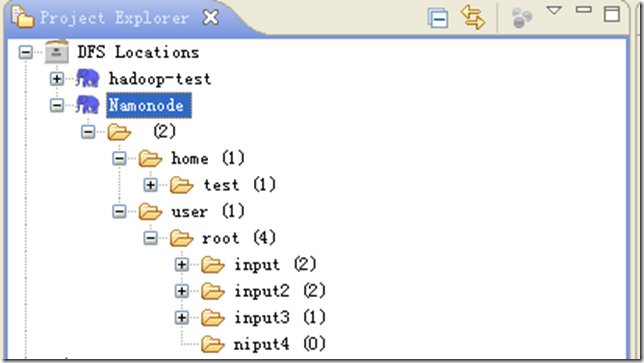

If everything is set up correctly, the connection then appears in the project area.

From there you can manage the HDFS distributed file system: upload, delete, and so on.

To prepare for the test below, first create the directory /user/root/input2 and upload two txt files into it:

input1.txt contains: Hello Hadoop Goodbye Hadoop

input2.txt contains: Hello World Bye World

HDFS is now ready, so testing can begin.

Hadoop project

Create a new Map/Reduce Project and set the local Hadoop installation directory.

Create a test class, WordCountTest.


Right-click it and choose "Run Configurations". In the dialog that opens, click the "Arguments" tab and enter the following under "Program arguments":

hdfs://master:9000/user/root/input2 hdfs://master:9000/user/root/output2

Note: these arguments are for local debugging, not for a real deployment.

Then click "Apply" and "Close". You can now right-click and choose "Run on Hadoop" to run it.

At this point, however, you get an exception like this:

12/04/24 15:32:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

12/04/24 15:32:44 ERROR security.UserGroupInformation: PriviledgedActionException as:Administrator cause:java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Administrator\mapred\staging\Administrator-519341271\.staging to 0700

Exception in thread "main" java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Administrator\mapred\staging\Administrator-519341271\.staging to 0700

at org.apache.hadoop.fs.FileUtil.checkReturnValue(FileUtil.java:682)

at org.apache.hadoop.fs.FileUtil.setPermission(FileUtil.java:655)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:509)

at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:344)

at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:189)

at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:116)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:856)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:850)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1093)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:850)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:500)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:530)

at com.hadoop.learn.test.WordCountTest.main(WordCountTest.java:85)

This is a file-permission problem on Windows; on Linux the job runs normally and the problem does not occur.

The fix is to edit checkReturnValue in /hadoop-1.0.2/src/core/org/apache/hadoop/fs/FileUtil.java and comment out its check (a somewhat crude fix, but on Windows the check can be skipped).


Then rebuild hadoop-core-1.0.2.jar and use it to replace the hadoop-core-1.0.2.jar in the hadoop-1.0.2 root directory.

A modified hadoop-core-1.0.2-modified.jar is provided here; simply use it to replace the original hadoop-core-1.0.2.jar.

After replacing it, refresh the project, set up the jar dependencies correctly, and run WordCountTest again; it now works.

When it succeeds, refresh the HDFS directory in Eclipse and you will see the generated output2 directory.

Open the "part-r-00000" file to see the sorted result:

Bye 1

Goodbye 1

Hadoop 2

Hello 2

World 2
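The sorted counts above follow from WordCount's map, shuffle/sort and reduce phases. The plain-Java, in-memory sketch below uses no Hadoop classes (`LocalWordCount` is a hypothetical name) and reproduces them for the two input files: the map step emits (word, 1) pairs, a `TreeMap` stands in for the framework's sort by key, and the reduce step sums each group.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical in-memory imitation of WordCount's phases; no Hadoop involved. */
final class LocalWordCount {
    /** Map phase: emit (word, 1) for every whitespace-separated token. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty()) {
                out.add(new AbstractMap.SimpleEntry<>(token, 1));
            }
        }
        return out;
    }

    /** Shuffle/sort: group values by key in key order; reduce: sum each group. */
    static TreeMap<String, Integer> run(List<String> lines) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> kv : map(line)) {
                grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        TreeMap<String, Integer> reduced = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            int sum = 0;
            for (int one : group.getValue()) {
                sum += one;
            }
            reduced.put(group.getKey(), sum);
        }
        return reduced;
    }
}
```

Calling `run` on "Hello Hadoop Goodbye Hadoop" and "Hello World Bye World" yields the keys in the same order as part-r-00000, because Java's `String` ordering matches byte order for these ASCII words.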

You can also debug the program as usual: set breakpoints and choose right-click > Debug As > Java Application. (Before each run, you must delete the output directory manually.)

In addition, the plugin automatically generates jar files and other files, including some Hadoop configuration, under workspace\.metadata\.plugins\org.apache.hadoop.eclipse in the corresponding Eclipse workspace.

There are more details to discover as you use it.

Exceptions encountered

org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create directory /user/root/output2/_temporary. Name node is in safe mode.

The ratio of reported blocks 0.5000 has not reached the threshold 0.9990. Safe mode will be turned off automatically.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:2055)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:2029)

at org.apache.hadoop.hdfs.server.namenode.NameNode.mkdirs(NameNode.java:817)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:563)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1388)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1384)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1093)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1382)

On the master node, turn off safe mode:

# bin/hadoop dfsadmin -safemode leave

Packaging

Packaging the Map/Reduce project as a jar is straightforward. Just make sure the jar's META-INF/MANIFEST.MF contains a Main-Class entry:

Main-Class: com.hadoop.learn.test.TestDriver

If you use third-party jars, add a Class-Path entry to MANIFEST.MF.
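The Main-Class and Class-Path entries can also be generated with `java.util.jar.Manifest` rather than typed by hand. In this sketch `DriverManifest` is a hypothetical helper and the jar names in the usage are placeholders; Class-Path entries are space-separated relative URLs, and `Manifest.write` takes care of line wrapping.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

/** Hypothetical helper that renders MANIFEST.MF text for a runnable job jar. */
final class DriverManifest {
    static String build(String mainClass, List<String> thirdPartyJars) {
        Manifest mf = new Manifest();
        Attributes main = mf.getMainAttributes();
        main.put(Attributes.Name.MANIFEST_VERSION, "1.0"); // conventionally the first header
        main.put(Attributes.Name.MAIN_CLASS, mainClass);
        if (!thirdPartyJars.isEmpty()) {
            // Class-Path is a space-separated list, relative to the jar's location
            main.put(Attributes.Name.CLASS_PATH, String.join(" ", thirdPartyJars));
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            mf.write(out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

For example, `DriverManifest.build("com.hadoop.learn.test.TestDriver", List.of("lib/a.jar", "lib/b.jar"))` yields text that could be saved as META-INF/MANIFEST.MF.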

You can also use the plugin's MapReduce Driver wizard, which lets you run jobs on Hadoop by alias. This is especially useful when a project contains several Map/Reduce jobs.

A MapReduce Driver only needs a main method that registers the aliases.

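The alias mechanism maps a name given on the command line to a program's main method; Hadoop's own example drivers use `org.apache.hadoop.util.ProgramDriver` for this. The plain-Java sketch below only imitates that dispatch so it runs without Hadoop. `AliasDriver` and `addProgram` are hypothetical names, not the ProgramDriver API.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical imitation of alias-based dispatch; not Hadoop's ProgramDriver. */
final class AliasDriver {
    private final Map<String, Consumer<String[]>> programs = new LinkedHashMap<>();
    private final Map<String, String> descriptions = new LinkedHashMap<>();

    void addProgram(String alias, Consumer<String[]> main, String description) {
        programs.put(alias, main);
        descriptions.put(alias, description);
    }

    /** Runs the program named by args[0] with the remaining arguments; 0 on success. */
    int run(String[] args) {
        if (args.length == 0 || !programs.containsKey(args[0])) {
            System.err.println("Valid program names are:");
            descriptions.forEach((alias, d) -> System.err.println("  " + alias + ": " + d));
            return -1;
        }
        programs.get(args[0]).accept(Arrays.copyOfRange(args, 1, args.length));
        return 0;
    }
}
```

With a program registered as testcount, the arguments testcount input2 output3 (as in the bin/hadoop jar command that follows) would run it with input2 output3.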

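A small trick: right-click the MapReduce Driver class and choose Run on Hadoop. The plugin automatically generates a jar under workspace\.metadata\.plugins\org.apache.hadoop.eclipse in your Eclipse workspace; upload it to HDFS or to the Hadoop root directory on the remote machine and run it: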

# bin/hadoop jar LearnHadoop_TestDriver.java-460881982912511899.jar testcount input2 output3

OK, that's the end of this post.


java.lang.RuntimeException: Error while running command to get file permissions : java.io.IOException: Cannot run program "ls": CreateProcess error=2,

The fix is to submit the job to a remote host (usually Linux) and run it there. Add the following to hbase-site.xml:

<property>
<name>mapred.job.tracker</name>
<value>master:9001</value>
</property>

You also need to turn off HDFS permission checking:

<property>
<name>dfs.permissions</name>
<value>false</value>
</property>

Also, because the job runs on the remote host, custom classes such as the Mapper and Reducer must be packaged into a jar and uploaded. For details, see:
Hadoop Job Submission Analysis (Part 5): http://www.cnblogs.com/spork/archive/2010/04/21/1717592.html

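After several days of digging I finally figured it out: the Configuration holds the job's settings, and the remote JobTracker uses it to build and run the job. Because the remote host does not have your custom MapReduce classes, they must be packaged into a jar and uploaded to that host. You do not have to upload the jar by hand every time, though; you can set it in code: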

conf.set("tmpjars", "d:/aaa.jar");

Also note that on Windows the path separator is ";", so the generated jar list is ";"-separated, which the remote Linux host cannot parse. Change it to ":":

System.setProperty("path.separator", ":");
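The separator problem can be seen in isolation: a value joined with the JVM's `path.separator` on Windows (";") arrives as a single entry at a reader that splits on ":". `ClasspathJoin` is a hypothetical helper for illustration only; it does not reproduce how Hadoop itself builds its classpath properties.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper showing why ";"-joined entries break on a Linux reader. */
final class ClasspathJoin {
    /** Joins entries with the JVM's current path.separator (";" on Windows, ":" on Linux). */
    static String joinWithJvmSeparator(List<String> entries) {
        return String.join(System.getProperty("path.separator"), entries);
    }

    /** How a Linux-side reader would split the value: on ":" only. */
    static List<String> splitOnLinux(String value) {
        return Arrays.asList(value.split(":"));
    }
}
```

This also hints at a limitation of the workaround: a Windows drive path such as d:/aaa.jar itself contains ":", so it is safer for any entries that travel to the remote host to be plain paths without drive letters.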

References:

http://www.cnblogs.com/xia520pi/archive/2012/05/20/2510723.html

Submitting a Job with the hadoop eclipse plugin and adding multiple third-party jars (complete version):
http://heipark.iteye.com/blog/1171923
