Two-phase commit(http://en.wikipedia.org/wiki/Two-phase_commit_protocol)是分布式事務最基礎的協議,Three-phase commit(http://en.wikipedia.org/wiki/Three-phase_commit_protocol)主要解決Two-phase commit中協調者宕機問題。

Two-phase commit的算法實現 (from <<Distributed System: Principles and Paradigms>>):

協調者(Coordinator):

write START_2PC to local log;

multicast VOTE_REQUEST to all participants;

while not all votes have been collected {

wait for any incoming vote;

if timeout {

write GLOBAL_ABORT to local log;

multicast GLOBAL_ABORT to all participants;

exit;

}

record vote;

}

if all participants sent VOTE_COMMIT and coordinator votes COMMIT {

write GLOBAL_COMMIT to local log;

multicast GLOBAL_COMMIT to all participants;

} else {

write GLOBAL_ABORT to local log;

multicast GLOBAL_ABORT to all participants;

}

參與者(Participants)

write INIT to local log;

wait for VOTE_REQUEST from coordinator;

if timeout {

write VOTE_ABORT to local log;

exit;

}

if participant votes COMMIT {

write VOTE_COMMIT to local log;

send VOTE_COMMIT to coordinator;

wait for DECISION from coordinator;

if timeout {

multicast DECISION_REQUEST to other participants;

wait until DECISION is received; /* remain blocked*/

write DECISION to local log;

}

if DECISION == GLOBAL_COMMIT

write GLOBAL_COMMIT to local log;

else if DECISION == GLOBAL_ABORT

write GLOBAL_ABORT to local log;

} else {

write VOTE_ABORT to local log;

send VOTE_ABORT to coordinator;

}

另外,每個參與者維護一個線程專門處理其它參與者的DECISION_REQUEST請求,處理線程流程如下:

while true {

wait until any incoming DECISION_REQUEST is received;

read most recently recorded STATE from the local log;

if STATE == GLOBAL_COMMIT

send GLOBAL_COMMIT to requesting participant;

else if STATE == INIT or STATE == GLOBAL_ABORT;

send GLOBAL_ABORT to requesting participant;

else

skip; /* participant remains blocked */

}

從上述的協調者與參與者的流程可以看出,如果所有參與者VOTE_COMMIT后協調者宕機,這個時候每個參與者都無法單獨決定全局事務的最終結果(GLOBAL_COMMIT還是GLOBAL_ABORT),也無法從其它參與者獲取,整個事務一直阻塞到協調者恢復;如果協調者出現類似磁盤壞這種永久性錯誤,該事務將成為被永久遺棄的孤兒。問題的解決有如下思路:

1. 協調者持久化數據定期備份。為了防止協調者出現永久性錯誤,這是一種代價最小的解決方法,不容易引入bug,但是事務被阻塞的時間可能特別長,比較適合銀行這種正確性高于一切的系統。

2. Three-phase Commit。這是理論上的一種方法,實現起來復雜且效率低。思路如下:假設參與者機器不可能出現超過一半同時宕機的情況,如果協調者宕機,我們需要從活著的超過一半的參與者中得出事務的全局結果。由于不可能知道已經宕機的參與者的狀態,所以引入一個新的參與者狀態PRECOMMIT,參與者成功執行一個事務需要經過INIT, READY, PRECOMMIT,最后到COMMIT狀態;如果至少有一個參與者處于PRECOMMIT或者COMMIT,事務成功;如果至少一個參與者處于INIT或者ABORT,事務失敗;如果所有的參與者都處于READY(至少一半參與者活著),事務失敗,即使原先宕機的參與者恢復后處于PRECOMMIT狀態,也會因為有其它參與者處于ABORT狀態而回滾。PRECOMMIT狀態的引入給了宕機的參與者回滾機會,所以Three-phase commit在超過一半的參與者活著的時候是不阻塞的。不過,Three-phase Commit只能算是是理論上的探索,效率低并且沒有解決網絡分區問題。

3. Paxos解決協調者單點問題。Jim Gray和Lamport合作了一篇論文講這個方法,很適合互聯網公司的超大規模集群,Google的Megastore事務就是這樣實現的,不過問題在于Paxos和Two-phase Commit都不簡單,需要有比較靠譜(代碼質量高)的小團隊設計和編碼才行。后續的blog將詳細闡述該方法。

總之,分布式事務只能是系統開發者的烏托邦式理想,Two-phase commit的介入將導致涉及多臺機器的事務之間完全串行,沒有代價的分布式事務是不存在的。

前面我的一篇文章http://hi.baidu.com/knuthocean/blog/item/12bb9f3dea0e400abba1673c.html引用了對Google App Engine工程師關于Bigtable/Megastore replication的文章。當時留下了很多疑問,比如:為什么Google Bigtable 是按照column family級別而不是按行執行replication的?今天重新思考了Bigtable replication問題,有如下體會:

1. Bigtable/GFS的設計屬于分層設計,和文件系統/數據庫分層設計原理一致,通過系統隔離解決工程上的問題。這種分層設計帶來了兩個問題,一個是性能問題,另外一個就是Replication問題。由于存儲節點和服務節點可能不在一臺機器,理論上總是存在性能問題,這就要求我們在加載/遷移Bigtable子表(Bigtable tablet)的時候考慮本地化因素;另外,GFS有自己的replication機制保證存儲的可靠性,Bigtable通過分離服務節點和存儲節點獲得了很大的靈活性,且Bigtable的宕機恢復時間可以做到很短。對于很多對實時性要求不是特別高的應用Bigtable由于服務節點同時只有一個,既節約資源又避免了單點問題。然后,Bigtable tablet服務過于靈活導致replication做起來極其困難。比如,tablet的分裂和合并機制導致多個tablet(一個只寫,其它只讀)服務同一段范圍的數據變得幾乎不可能。

2. Google replication分為兩種機制,基于客戶端和基于Tablet Server。分述如下:

2-1). 基于客戶端的replication。這種機制比較簡單,實現如下:客戶端讀/寫操作均為異步操作,每個寫操作都嘗試寫兩個Bigtable集群,任何一個寫成功就返回用戶,客戶端維護一個retry list,不斷重試失敗的寫操作。讀操作發到兩個集群,任何一個集群讀取成功均可。然后,這樣做有兩個問題:

a. 客戶端不可靠,可能因為各種問題,包括程序問題退出,retry list丟失導致兩個集群的數據不一致;

b. 多個客戶端并發操作時無法保證順序性。集群A收到的寫操作可能是"DEL item; PUT item";集群B的可能是"PUT item; DEL item"。

2-2). 基于Tablet Server的replication。這種機制實現較為復雜,目的是為了保證讀服務,寫操作的延時仍然可能比較長。兩個集群,一個為主集群,提供讀/寫服務;一個為slave集群,提供只讀服務,兩個集群維持最終一致性。對于一般的讀操作,盡量讀取主集群,如果主集群不可以訪問則讀取slave集群;對于寫操作,首先將寫操作提交到主集群的Tablet Server,主集群的Tablet Server維護slave集群的元數據信息,并維護一個后臺線程不斷地將積攢的用戶表格寫操作提交到slave集群進行日志回放(group commit)。對于一般的tablet遷移,操作邏輯和Bigtable論文中的完全一致;主集群如果發生了機器宕機,則除了回放commit log外,還需要完成宕機的Tablet Server遺留的后臺備份任務。之所以要按照column family級別而不是按行復制,是為了提高壓縮率從而提高備份效率。如果主集群寫操作日志的壓縮率大于備份數據的壓縮率,則可能出現備份不及時,待備份數據越來越多的問題。

假設集群A為主集群,集群B是集群A的備份,集群切換時先停止集群A的寫服務,將集群A余下的備份任務備份到集群B后切換到集群B;如果集群A不可訪問的時間不可預知,可以選擇直接切換到集群B,這樣會帶來一致性問題。且由于Bigtable是按列復制的,最后寫入的一些行的事務性無法保證。不過由于寫操作數據還是保存在集群A的,所以用戶可以知道丟了哪些數據,很多應用可以通過重新執行A集群遺留的寫操作進行災難恢復。Google的App Engine也提供了這種查詢及重做丟失的寫操作的工具。

想法不成熟,有問題聯系:knuthocean@163.com

負載平衡策略

Dynamo的負載平衡取決于如何給每臺機器分配虛擬節點號。由于集群環境的異構性,每臺物理機器包含多個虛擬節點。一般有如下兩種分配節點號的方法:

1. 隨機分配。每臺物理節點加入時根據其配置情況隨機分配S個Token(節點號)。這種方法的負載平衡效果還是不錯的,因為自然界的數據大致是比較隨機的,雖然可能出現某段范圍的數據特別多的情況(如baidu, sina等域名下的網頁特別多),但是只要切分足夠細,即S足夠大,負載還是比較均衡的。這個方法的問題是可控性較差,新節點加入/離開系統時,集群中的原有節點都需要掃描所有的數據從而找出屬于新節點的數據,Merkle Tree也需要全部更新;另外,增量歸檔/備份變得幾乎不可能。

2. 數據范圍等分+隨機分配。為了解決方法1的問題,首先將數據的Hash空間等分為Q = N * S份 (N=機器個數,S=每臺機器的虛擬節點數),然后每臺機器隨機選擇S個分割點作為Token。和方法1一樣,這種方法的負載也比較均衡,且每臺機器都可以對屬于每個范圍的數據維護一個邏輯上的Merkle Tree,新節點加入/離開時只需掃描部分數據進行同步,并更新這部分數據對應的邏輯Merkle Tree,增量歸檔也變得簡單。該方法的一個問題是對機器規模需要做出比較合適的預估,隨著業務量的增長,可能需要重新對數據進行劃分。

不管采用哪種方法,Dynamo的負載平衡效果還是值得擔心的。

客戶端緩存及前后臺任務資源分配

客戶端緩存機器信息可以減少一次在DHT中定位目標機器的網絡交互。由于客戶端數量不可控,這里緩存采用客戶端pull的方式更新,Dynamo中每隔10s或者讀/寫操作發現緩存信息不一致時客戶端更新一次緩存信息。

Dynamo中同步操作、寫操作重試等后臺任務較多,為了不影響正常的讀寫服務,需要對后臺任務能夠使用的資源做出限制。Dynamo中維護一個資源授權系統。該系統將整個機器的資源切分成多個片,監控60s內的磁盤讀寫響應時間,事務超時時間及鎖沖突情況,根據監控信息算出機器負載從而動態調整分配給后臺任務的資源片個數。

Dynamo的優點

1. 設計簡單,組合利用P2P的各種成熟技術,模塊劃分好,代碼復用程度高。

2. 分布式邏輯與單機存儲引擎邏輯基本隔離。很多公司有自己的單機存儲引擎,可以借鑒Dynamo的思想加入分布式功能。

3. NWR策略可以根據應用自由調整,這個思想已經被Google借鑒到其下一代存儲基礎設施中。

4. 設計上天然沒有單點,且基本沒有對系統時鐘一致性的依賴。而在Google的單Master設計中,Master是單點,需要引入復雜的分布式鎖機制來解決,且Lease機制需要對機器間時鐘同步做出假設。

Dynamo的缺陷

1. 負載平衡相比單Master設計較不可控;負載平衡策略一般需要預估機器規模,不能無縫地適應業務動態增長。

2. 系統的擴展性較差。由于增加機器需要給機器分配DHT算法所需的編號,操作復雜度較高,且每臺機器存儲了整個集群的機器信息及數據文件的Merkle Tree信息,機器最大規模只能到幾千臺。

3. 數據一致性問題。多個客戶端的寫操作有順序問題,而在GFS中可以通過只允許Append操作得到一個比較好的一致性模型。

4. 數據存儲不是有序,無法執行Mapreduce;Mapreduce是目前允許機器故障,具有強擴展性的最好的并行計算模型,且有開源的Hadoop可以直接使用,Dynamo由于數據存儲依賴Hash無法直接執行Mapreduce任務。

異常處理

Dynamo中把異常分為兩種類型,臨時性的異常和永久性異常。服務器程序運行時一般通過類似supervise的監控daemon啟動,出現core dump等異常情況時自動重啟。這種異常是臨時性的,其它異常如硬盤報修或機器報廢等由于其持續時間太長,稱之為永久性的。回顧Dynamo的設計,一份數據被寫到N, N+1, ... N+K-1這K臺機器上,如果機器N+i (0 <= i <= K-1)宕機,原本寫入該機器的數據轉移到機器N+K,機器N+K定時ping機器N+i,如果在指定的時間T內N+i重新提供服務,機器N+K將啟動傳輸任務將暫存的數據發送給機器N+i;如果超過了時間T機器N+i還是處于宕機狀態,這種異常被認為是永久性的,這時需要借助Merkle Tree機制進行數據同步。這里的問題在于時間T的選擇,所以Dynamo的開發人員后來干脆把所有程序檢測出來的異常認為是臨時性的,并提供給管理員一個utility工具,用來顯示指定一臺機器永久性下線。由于數據被存儲了K份,一臺機器下線將導致后續的K臺機器出現數據不一致的情況。這是因為原本屬于機器N的數據由于機器下線可能被臨時寫入機器N+1, ... N+K。如果機器N出現永久性異常,后續的K臺機器都需要服務它的部分數據,這時它們都需要選擇冗余機器中較為空閑的一臺進行同步。Merkle Tree同步的原理很簡單,每個非葉子節點對應多個文件,為其所有子節點值組合以后的Hash值,葉子節點對應單個數據文件,為文件內容的Hash值。這樣,任何一個數據文件不匹配都將導致從該文件對應的葉子節點到根節點的所有節點值不同。每臺機器維護K棵Merkle Tree,機器同步時首先傳輸Merkle Tree信息,并且只需要同步從根到葉子的所有節點值均不相同的文件。

讀/寫流程

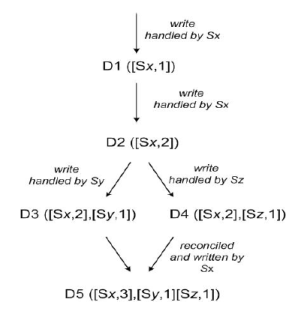

客戶端的讀/寫請求首先傳輸到緩存的一臺機器,根據預先配置的K、W和R值,對于寫請求,根據DHT算法計算出數據所屬的節點后直接寫入后續的K個節點,等到W個節點返回成功時返回客戶端,如果寫請求失敗將加入retry_list不斷重試。如果某臺機器發生了臨時性異常,將數據寫入后續的備用機器并在備用機器中記錄臨時異常的機器信息。對于讀請求,根據DHT算法計算出數據所屬節點后根據負載策略選擇R個節點,從中讀取R份數據,如果數據一致,直接返回客戶端;如果數據不一致,采用vector clock的方法解決沖突。Dynamo系統默認的策略是選擇最新的數據,當然用戶也可以自定義沖突處理方法。每個寫入系統的<key, value>對都記錄一個vector lock信息,vector lock就是一系列<機器節點號, 版本號/時間戳>對,記錄每臺機器對該數據的最新更新版本信息。如下圖:

讀取時進行沖突解決,如果一臺機器讀到的數據的vector lock記錄的所有版本信息都小于另一臺機器,直接返回vector lock較大的數據;如果二者是平行版本,根據時間戳選擇最新的數據或者通過用戶自定義策略解決沖突。讀請求除了返回數據<key, value>值以外還返回vector lock信息,后續的寫操作需要帶上該信息。

問題1:垃圾數據如何回收?

Dynamo的垃圾回收機制主要依賴每個節點上的存儲引擎,如Berkely db存儲引擎,merge-dump存儲引擎等。其它操作,如Merkle Tree同步產生的垃圾文件回收可以和底層存儲引擎配合完成。

問題2:Dynamo有沒有可能丟數據?

關鍵在于K, W, R的設置。假設一個讀敏感應用設置K=3, W=3, R=1,待處理的數據原本屬于節點A, B, C,節點B出現臨時性故障的過程中由節點D代替。在節點B出現故障到節點B同步完成節點D暫存的修改這段時間內,如果讀請求落入節點B或者D都將出現丟數據的問題。這里需要適當處理下,對于B節點下線的情況,由于其它機器要么緩存了B節點已下線信息,要么讀取時將發現B節點處于下線狀態,這是只需要將請求轉發其它節點即可;對于B節點上線情況,可以等到B節點完全同步以后才開始提供讀服務。對于設置W<K的應用,Dynamo讀取時需要解決沖突,可能丟數據。總之,Dynamo中可以保證讀取的機器都是有效的(處于正常服務狀態),但W != K時不保證所有的有效機器均同步了所有更新操作。

問題3:Dynamo的寫入數據有沒有順序問題?

假設要寫入兩條數據"add item"和"delete item",如果寫入的順序不同,將導致完全不同的結果。如果設置W=K,對于同一個客戶端,由于寫入所有的機器以后才返回,可以保證順序;而多個客戶端的寫操作可能被不同的節點處理,不能保證順序性。如果設置W < K,Dynamo不保證順序性。

問題4:沖突解決后是否需要將結果值更新存儲節點?

讀操作解決沖突后不需要將結果值更新存儲節點。產生沖突的情況一般有機器下線或者多個客戶端導致的順序問題。機器下線時retry_list中的操作將丟失,某些節點不能獲取所有的更新操作。對于機器暫時性或者永久性的異常,Dynamo中內部都有同步機制進行處理,但是對于retry_list中的操作丟失或者多個客戶端引發的順序問題,Dynamo內部根本無法分辨數據是否正確。唯一的沖突解決機器在讀操作,Dynamo可以設計成讀操作將沖突解決結果值更新存儲節點,但是這樣會使讀操作變得復雜和不高效。所以,比較好的做法是每個寫操作都帶上讀操作返回的多個版本數據,寫操作將沖突處理的結果更新存儲節點。

樂觀鎖:有時稱作optimistic concurrency control, 指并發控制的時候“樂觀”地認為沖突的概率很小,萬一發生了沖突再重試。具體表現為事務執行過程中不鎖住其它事務,等到事務提交的時候看一下是否發生了沖突,如果沖突則重試或回滾,否則提交事務。

悲觀鎖:并發控制的時候總是很悲觀,事務執行過程中鎖住其它事務,事務提交時不會有沖突。

從表面上看,悲觀鎖比較符合計算機基礎課上灌輸的思維,然而,在分布式系統環境下,異常是常有的事。假設分布式系統采用悲觀鎖設計,如果客戶端發出事務(加鎖)請求后異常退出,將導致系統被永久鎖住。Web應用存儲系統一般采用樂觀鎖設計,這和Web應用的讀/寫比例一般超過10相關。系統設計的時候面臨這樣一種CAS(Compare-And-Swap)需求:如果待操作項符合某個條件則修改。我們可以采用悲觀鎖鎖住待操作項的所有修改,再加上鎖的最大持有時間限制,但這樣的API設計風險很大,樂觀鎖可以很好地解決該問題。

coarse-grained vs fine-grained:粗粒度和細粒度。J2EE中常用來指API的粒度,比如, 我有一個遠程對象, 他有很多屬性和對應的getter和setter方法, 如果我們遠程調用對象每個屬性的getter和setter方法, 就會產生很多遠程方法調用. 這就是fine-grained, 會造成性能問題。所以我們可以用一個setdata或getdata的方法把一些屬性的訪問封裝起來, 只用一個遠程方法傳輸一個data transfer object來對該對象進行賦值和訪問, 這就是coarse-grained。Google Chubby中用來表示鎖的粒度。coarse-grained指的是分布式鎖的持有時間可以很長并不斷延長鎖的持有時間,這樣的好處在于對鎖服務器的壓力較小,難點在于鎖服務端宕機恢復需要恢復鎖的狀態,find-grained指的是分布式鎖的持有時間一般是秒級或者毫秒級,這樣的好處在于鎖服務器宕機恢復不必維持原有鎖的狀態,但這種簡單的設計方法導致服務器壓力很大,不容易擴展到大集群。Google的設計一開始就把集群的線性擴展放到了一個很重要的位置,所以Google Chubby里面使用了coarse-grained的設計。客戶端可以簡單地在coarse-grained鎖的基礎上擴展出一個fine-grained的鎖,具體請看Chubby論文:scholar.google.cn/scholar

大牛的文章固然不錯,不過肯定不大好懂,下面我說一下我對文章的翻譯+理解:

1. Google Bigtable只支持最簡單的insert, update, del, ...等函數調用API,不支持SQL形式的API,這個轉換工作放到了Megastore層次上來做。SQL對于異步Bigtable調用的支持需要仔細考慮。

2. 對于索引支持文章中已經說得很明顯了,維護一個<索引,row_key>的索引表,更新時先更新數據表再更新索引表,索引項越多,更新效率越低,但是讀基本不怎么影響,特別適合互聯網這種讀/寫比例一般超過10倍的應用。

3. Megastore不提供通用的分布式事務支持,分布式事務僅僅限于同一個entity group。Bigtable支持單行的事務,而Megastore支持row key前綴相同的多行事務,如一個用戶的blog, post, photo,可以將它們存在到Bigtable的一張表中,row key為user_id + blog_id + post_id + photo_id,這樣同一個user的數據即為一個entity group。然而,這樣就導致不能支持像百付寶、支付寶等電子商務轉賬事務,我暫時也還不清楚支持同一個entity group內部的事務意義有多大,即有多少web應用需要這種同一個entity group下的事務支持。

4. Megastore支持事務的方式當然還是傳統的Two-phase commit協議,為了解決這個協議中協調者失效導致的問題,引入Paxos協議(Google Chubby)使協調者不再成為單點。具體做起來會非常復雜,這里提供超級大牛Jim Gray和Lamport的一篇論文供大家參考:scholar.google.com/scholar 個人認為Oracle的事務內部是一個基本的Two-phase commit協議,協調者宕機時由Oracle DBA手工介入,由于其復雜性,對DBA要求很高,所以Taobao一直網羅國內頂級DBA牛人。

5. Megastore具體事務實現時會借用Bigtable 原有的機制來實現commit log, replication等功能。可能的實現為:建一張專門的Entity group root表,加載Entity group root表的Tablet Server做為協調者角色進行分布式事務控制。然而問題在于加載Entity group root表的Tablet Server是一個單點,實現多個Tablet Server服務同一個Bigtable Tablet又是一件極其困難的事情。

6. Megastore不支持復雜的Join操作,這和互聯網公司應用性質相關。Join操作一般不要求強一致性,可以通過多表冗余方式實現。

7. 事務的并發控制采用最優控制策略。簡單來說,就是事務過程中不要鎖住其它事務操作,提交的時候看一下是否與其它事務沖突,如果有沖突則重試。Megastore實現時沒有rollback,失敗了都是retry,宕機了就回放操作日志。

8. Megastore/Bigtable的實現者認為讓用戶自己指定entity group, locality group是合理的(和數據存儲位置相關)。這樣的效果是同一個entity group的數據經常存放在一臺機器上,分布式事務的性能損耗較小,這也就說明在分布式系統中,沒有代價的scalable是不存在的,要想獲得scalable和性能,就必須犧牲關系數據庫的一些特性及用戶的易用性。

上述均為個人的粗淺看法,如何避免協調者的單點等很多問題還沒有想清楚,Bigtable和Megastore的replication策略看起來也有些沖突,想清楚后將續寫!

為了在可用性和效率之間權衡,Dynamo的設計中允許用戶指定讀/寫個數R和W值。R和W分別表示每個讀/寫操作需要操作的副本數。只要滿足R+W > K,就可以保證在存在不超過一臺機器故障的時候,至少能夠讀到一份有效的數據。如果應用重視讀效率,可以設置W = K, R = 1;如果應用需要在讀/寫之間權衡,一般可設置W = 2, R = 2,K = 3。

問題1:Dynamo中如何解決網絡分區問題?

前面已經提到,DHT協議本身是無法處理網絡分區的。在Dynamo中,引入種子節點,服務器定期向種子節點輪詢整個機群的機器信息,種子節點的選擇符合一定的策略使得網絡分區問題出現概率降至工程可以接受的水平。

問題2:如何將數據復制到多個數據中心?

每份數據都被復制到N, N+1, ..., N+K-1這K臺機器中,為了保證這些機器屬于不同的數據中心,需要合理地設計獲取數據節點號的Hash算法。當然,Dynamo通過直接手工配置每臺機器的編號解決。看起來很山寨,不過很實用,呵呵。 閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/f085d72a06d4ee27d52af170.html DHT全稱Distributed Hash Table (en.wikipedia.org/wiki/Distributed_hash_table),在P2P系統中經常用來定位數據所屬機器。這就涉及到一致哈希(consistent hashing)思想,分布式系統中經常出現機器上下線,如果采用通常的Hash方法來查找數據所屬機器,機器上下線將導致整個集群的數據分布被打亂。這是因為,機器上下線將導致機器序號及Hash函數的改變,一致哈希做了簡單調整:每臺機器存儲哈希值和它最為接近的數據。在Chord系統中,順時針到達的第一臺機器即為最近的機器。

外部的數據可能首先傳輸至集群中的任意一臺機器,為了找到數據所屬機器,要求每臺機器維護一定的集群機器信息用于定位。最直觀的想法當然是每臺機器分別維護它的前一臺及后一臺機器的信息,機器的編號可以為機器IP的Hash值,定位機器最壞情況下復雜度為O(N)。可以采用平衡化思想來優化(如同平衡二叉樹優化數組/鏈表),使每一臺機器存儲O(logN)的集群機器信息,定位機器最壞情況下復雜度為O(logN)。

首先考慮每臺機器維護前一臺及后一臺機器信息的情況,這里的問題是機器上下線導致緩存信息的不一致,我們需要設計協議使得在確定一段比較短的時間內能夠糾正這種錯誤。對于新機器加入,首先進行一次查找操作找到該機器的下一臺機器,并記錄下一臺機器的信息。機器內的每臺機器都定時向它的后繼發送心跳信息,如果后繼記錄的前一臺機器編號在二者之間,說明有新機器加入,這時需要更新后一臺機器編號為新加入編號;收到心跳信息的后繼也需要檢查,如果發送心跳的機器編號較為接近則更新為前一臺機器。機器下線將導致機器循環鏈表斷開,所以,每臺機器都維護了R個(一般取R值為3)最近的后繼信息,發現后繼節點下線時將通知其它后繼節點并加入新的替換節點。如果R個后繼節點同時下線,需要操作人員手工介入修復循環鏈。

Chord中的每臺機器維護O(logN)的機器信息是一種空間換時間的做法,實現時需要引入額外的消息交換協議。這種做法依賴于如下前提:每臺機器維護的前一臺機器及后一臺機器除了短時間不一致外總是正確的。

問題1:機器緩存短時間不一致有什么影響?數據正確性依靠什么保證?

短時間可能出現緩存的機器信息不正確的情況。比如有A, C, D, E四臺機器,再加入一臺機器B,機器加入的過程中,原本屬于B的數據可能寫入到B或者C,這都是允許的。又如刪除機器C,訪問機器C的節點得不到數據。數據的可用性及一致性還需要通過額外的replication及沖突處理協議解決。

問題2:DHT能否處理網絡分區問題?

DHT不能處理網絡分區問題,理論上存在整個DHT被分成多個子集的情況。我想,這時侯需要外部的機制介入,如維護一臺外部機器保存所有機器列表等。 閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/cca1e711221dcfcca6ef3f1d.html Amazon Dynamo是組合使用P2P各種技術的經典論文,對單機key-value存儲系統擴展成分布式系統有借鑒意義,值得仔細推敲。本人準備近期深入閱讀該論文,并寫下讀書筆記自娛自樂。當然,如果有志同道合的同學非常歡迎交流。以下是閱讀計劃:

1. 一切從DHT開始。Dynamo首先要解決的就是給定關鍵字key找出服務節點的問題。Dynamo的思想與Chord有些類似,我們可以拋開replication問題,看看Chord和Dynamo是如何通過應用DHT解決服務節點定位問題的。這里面的難點當然是節點加入和刪除,尤其是多個節點并發加入/刪除。建議預先閱讀Chord論文:scholar.google.com/scholar 。

2. Dynamo的replication。理解了DHT,我們需要結合replication理解服務節點定位及錯誤處理等問題。

3. Dynamo錯誤處理。這里包括兩種類型的錯誤,一種是暫時性的,如由于程序bug core dump后重啟,另外一種是永久性的,這里用到了Merkle Tree同步技術。

4. Dynamo讀/寫流程設計及沖突解決。這里主要涉及到一致性模型。Dynamo允許根據應用配置R和W值來權衡效率及Availability,并使用了Lamport的Vector Clock來解決沖突。

5. Dynamo優化。主要是Load rebalance的優化。

6. Dynamo實現。如果讓我們自己來實現Dynamo,我們應該如何劃分模塊以及實現過程中有哪些關鍵問題?

后續將按照計劃對每個問題做閱讀筆記 :) 閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/8838ad34f9ae1dbdd0a2d3d7.html 推薦兩本分布式系統方面書籍:

1. <<Distributed Systems - Principles and Paradigms>> Andrew S. Tanenbaum www.china-pub.com/40777&ref=ps Tanenbaum出品,必屬精品。本書條理清晰,涉及到分布式系統的方方面面,通俗易懂并附錄了分布式系統各個經典問題的論文閱讀資料,是分布式系統入門的不二選擇。感覺和以前看過的<<Introduction to Algorithm>>一樣,讀起來讓人心曠神怡,建議通讀。

2. <<Introduction to Distributed Algorithms>> Gerard Tel www.china-pub.com/13102&ref=ps 我們老大推薦的書籍。雖然從名字看是入門型書籍,不過內容一點都不好懂,適合有一定基礎的同學。另外,千萬要注意,一定要買英文原版。 閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/8838ad34fbfb1fbdd1a2d364.html

OSDI ‘04

Best Paper:

Recovering Device Drivers

Michael M. Swift, Muthukaruppan Annamalai, Brian N. Bershad, and Henry M. Levy, University of Washington

Best Paper:

Using Model Checking to Find Serious File System Errors

Junfeng Yang, Paul Twohey, and Dawson Engler, Stanford University; Madanlal Musuvathi, Microsoft Research

LISA ‘04

Best Paper:

Scalable Centralized Bayesian Spam Mitigation with Bogofilter

Jeremy Blosser and David Josephsen, VHA, Inc.

Security ‘04

Best Paper:

Understanding Data Lifetime via Whole System Simulation

Jim Chow, Ben Pfaff, Tal Garfinkel, Kevin Christopher, and Mendel Rosenblum, Stanford University

Best Student Paper:

Fairplay—A Secure Two-Party Computation System

Dahlia Malkhi and Noam Nisan, Hebrew University; Benny Pinkas, HP Labs; Yaron Sella, Hebrew University

2004 USENIX Annual Technical Conference

Best Paper:

Handling Churn in a DHT

Sean Rhea and Dennis Geels, University of California, Berkeley; Timothy Roscoe, Intel Research, Berkeley; John Kubiatowicz, University of California, Berkeley

Best Paper:

Energy Efficient Prefetching and Caching

Athanasios E. Papathanasiou and Michael L. Scott, University of Rochester

FREENIX Track

Best Paper:

Wayback: A User-level Versioning File System for Linux

Brian Cornell, Peter A. Dinda, and Fabián E. Bustamante, Northwestern University

Best Student Paper:

Design and Implementation of Netdude, a Framework for Packet Trace Manipulation

Christian Kreibich, University of Cambridge, UK

VM ‘04

Best Paper:

Semantic Remote Attestation—A Virtual Machine Directed Approach to Trusted Computing

Vivek Haldar, Deepak Chandra, and Michael Franz, University of California, Irvine

FAST ‘04

Best Paper:

Row-Diagonal Parity for Double Disk Failure Correction

Peter Corbett, Bob English, Atul Goel, Tomislav Grcanac, Steven Kleiman, James Leong, and Sunitha Sankar, Network Appliance, Inc.

Best Student Paper:

Improving Storage System Availability with D-GRAID

Muthian Sivathanu, Vijayan Prabhakaran, Andrea C. Arpaci-Dusseau, and Remzi H. Arpaci-Dusseau, University of Wisconsin, Madison

Best Student Paper:

A Framework for Building Unobtrusive Disk Maintenance Applications

Eno Thereska, Jiri Schindler, John Bucy, Brandon Salmon, Christopher R. Lumb, and Gregory R. Ganger, Carnegie Mellon University

NSDI ‘04

Best Paper:

Trickle: A Self-Regulating Algorithm for Code Propagation and Maintenance in Wireless Sensor Networks Philip Levis, University of California, Berkeley, and Intel Research Berkeley; Neil Patel, University of California, Berkeley; David Culler, University of California, Berkeley, and Intel Research Berkeley; Scott Shenker, University of California, Berkeley, and ICSI

Best Student Paper:

Listen and Whisper: Security Mechanisms for BGP

Lakshminarayanan Subramanian, University of California, Berkeley; Volker Roth, Fraunhofer Institute, Germany; Ion Stoica, University of California, Berkeley; Scott Shenker, University of California, Berkeley, and ICSI; Randy H. Katz, University of California, Berkeley

2003

LISA ‘03

Award Paper:

STRIDER: A Black-box, State-based Approach to Change and Configuration Management and Support Yi-Min Wang, Chad Verbowski, John Dunagan, Yu Chen, Helen J. Wang, Chun Yuan, and Zheng Zhang, Microsoft Research

Award Paper:

Distributed Tarpitting: Impeding Spam Across Multiple Servers

Tim Hunter, Paul Terry, and Alan Judge, eircom.net

BSDCon ‘03

Best Paper:

Cryptographic Device Support for FreeBSD

Samuel J. Leffler, Errno Consulting

Best Student Paper:

Running BSD Kernels as User Processes by Partial Emulation and Rewriting of Machine Instructions Hideki Eiraku and Yasushi Shinjo, University of Tsukuba

12th USENIX Security Symposium

Best Paper:

Remote Timing Attacks Are Practical

David Brumley and Dan Boneh, Stanford University

Best Student Paper:

Establishing the Genuinity of Remote Computer Systems

Rick Kennell and Leah H. Jamieson, Purdue University

2003 USENIX Annual Technical Conference

Award Paper:

Undo for Operators: Building an Undoable E-mail Store

Aaron B. Brown and David A. Patterson, University of California, Berkeley

Award Paper:

Operating System I/O Speculation: How Two Invocations Are Faster Than One

Keir Fraser, University of Cambridge Computer Laboratory; Fay Chang, Google Inc.

FREENIX Track Best Paper:

StarFish: Highly Available Block Storage

Eran Gabber, Jeff Fellin, Michael Flaster, Fengrui Gu, Bruce Hillyer, Wee Teck Ng, Banu Özden, and Elizabeth Shriver, Lucent Technologies, Bell Labs

Best Student Paper:

Flexibility in ROM: A Stackable Open Source BIOS

Adam Agnew and Adam Sulmicki, University of Maryland at College Park; Ronald Minnich, Los Alamos National Labs; William Arbaugh, University of Maryland at College Park

First International Conference on Mobile Systems, Applications, and Services

Best Paper:

Energy Aware Lossless Data Compression

Kenneth Barr and Krste Asanovic, Massachusetts Institute of Technology

2nd USENIX Conference on File and Storage Technologies

Best Paper:

Using MEMS-Based Storage in Disk Arrays

Mustafa Uysal and Arif Merchant, Hewlett-Packard Labs; Guillermo A. Alvarez, IBM Almaden Research Center

Best Student Paper:

Pond: The OceanStore Prototype

Sean Rhea, Patrick Eaton, Dennis Geels, Hakim Weatherspoon, Ben Zhao, and John Kubiatowicz, University of California, Berkeley

4th USENIX Symposium on Internet Technologies and Systems

Best Paper:

SkipNet: A Scalable Overlay Network with Practical Locality Properties

Nicholas J. A. Harvey, Microsoft Research and University of Washington; Michael B. Jones, Microsoft Research; Stefan Saroiu, University of Washington; Marvin Theimer and Alec Wolman, Microsoft Research

Best Student Paper:

Scriptroute: A Public Internet Measurement Facility

Neil Spring, David Wetherall, and Tom Anderson, University of Washington

2002

5th Symposium on Operating Systems Design and Implementation

Best Paper:

Memory Resource Management in VMware ESX Server

Carl A. Waldspurger, VMware, Inc.

Best Student Paper:

An Analysis of Internet Content Delivery Systems

Stefan Saroiu, Krishna P. Gummadi, Richard J. Dunn, Steven D. Gribble, and Henry M. Levy, University of Washington

LISA ‘02: 16th Systems Administration Conference

Best Paper:

RTG: A Scalable SNMP Statistics Architecture for Service Providers

Robert Beverly, MIT Laboratory for Computer Science

Best Paper:

Work-Augmented Laziness with the Los Task Request System

Thomas Stepleton, Swarthmore College Computer Society

11th USENIX Security Symposium

Best Paper:

Security in Plan 9

Russ Cox, MIT LCS; Eric Grosse and Rob Pike, Bell Labs; Dave Presotto, Avaya Labs and Bell Labs; Sean Quinlan, Bell Labs

Best Student Paper:

Infranet: Circumventing Web Censorship and Surveillance

Nick Feamster, Magdalena Balazinska, Greg Harfst, Hari Balakrishnan, and David Karger, MIT

2nd Java Virtual Machine Research and Technology Symposium

Best Paper:

An Empirical Study of Method In-lining for a Java Just-in-Time Compiler

Toshio Suganuma, Toshiaki Yasue, and Toshio Nakatani, IBM Tokyo Research Laboratory

Best Student Paper:

Supporting Binary Compatibility with Static Compilation

Dachuan Yu, Zhong Shao, and Valery Trifonov, Yale University

2002 USENIX Annual Technical Conference

Best Paper:

Structure and Performance of the Direct Access File System

Kostas Magoutis, Salimah Addetia, Alexandra Fedorova, and Margo I. Seltzer, Harvard University; Jeffrey S. Chase, Andrew J. Gallatin, Richard Kisley, and Rajiv G. Wickremesinghe, Duke University; and Eran Gabber, Lucent Technologies

Best Student Paper:

EtE: Passive End-to-End Internet Service Performance Monitoring

Yun Fu and Amin Vahdat, Duke University; Ludmila Cherkasova and Wenting Tang, Hewlett-Packard Laboratories

FREENIX Track

Best FREENIX Paper:

CPCMS: A Configuration Management System Based on Cryptographic Names

Jonathan S. Shapiro and John Vanderburgh, Johns Hopkins University

Best FREENIX Student Paper:

SWILL: A Simple Embedded Web Server Library

Sotiria Lampoudi and David M. Beazley, University of Chicago

BSDCon ‘02

Best Paper:

Running “fsck” in the Background Marshall Kirk McKusick, Author and Consultant

Best Paper:

Design And Implementation of a Direct Access File System (DAFS) Kernel Server for FreeBSD

Kostas Magoutis, Division of Engineering and Applied Sciences, Harvard University

Conference on File and Storage Technologies

Best Paper:

VENTI - A New Approach to Archival Data Storage

Sean Quinlan and Sean Dorward, Bell Labs, Lucent Technologies

Best Student Paper:

Track-aligned Extents: Matching Access Patterns to Disk Drive Characteristics

Jiri Schindler, John Linwood Griffin, Christopher R. Lumb, Gregory R. Ganger, Carnegie Mellon University

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/8218034f4a01523caec3ab1c.html 前幾天有同學問分布式系統方向有哪些會議。網上查了一下,頂級的會議是OSDI(Operating System Design and Implementation)和SOSP(Symposium on Operating System Principles)。其它幾個會議,如NSDI,FAST,VLDB也常常有讓人眼前一亮的論文。值得慶幸的是,現在云計算太火了,GFS/Mapreduce/Bigtable等工程性文章都發表在最牛的OSDI上,并且Google Bigtable和Microsoft的Dryad LINQ還獲得了最佳論文獎。下面列出了每個會議的歷年最佳論文,希望我們可以站在一個制高點上。

USENIX ‘09

Best Paper:

Satori: Enlightened Page Sharing

Grzegorz Miłoś, Derek G. Murray, and Steven Hand, University of Cambridge Computer Laboratory; Michael A. Fetterman, NVIDIA Corporation

Best Paper:

Tolerating File-System Mistakes with EnvyFS

Lakshmi N. Bairavasundaram, NetApp., Inc.; Swaminathan Sundararaman, Andrea C. Arpaci-Dusseau, and Remzi H. Arpaci-Dusseau, University of Wisconsin—Madison

NSDI ‘09

Best Paper:

TrInc: Small Trusted Hardware for Large Distributed Systems

Dave Levin, University of Maryland; John R. Douceur, Jacob R. Lorch, and Thomas Moscibroda, Microsoft Research

Best Paper:

Sora: High Performance Software Radio Using General Purpose Multi-core Processors

Kun Tan and Jiansong Zhang, Microsoft Research Asia; Ji Fang, Beijing Jiaotong University; He Liu, Yusheng Ye, and Shen Wang, Tsinghua University; Yongguang Zhang, Haitao Wu, and Wei Wang, Microsoft Research Asia; Geoffrey M. Voelker, University of California, San Diego

FAST ‘09

Best Paper:

CA-NFS: A Congestion-Aware Network File System

Alexandros Batsakis, NetApp and

Generating Realistic Impressions for File-System Benchmarking

Nitin Agrawal, Andrea C. Arpaci-Dusseau, and Remzi H. Arpaci-Dusseau,

OSDI ‘08

Jay Lepreau Best Paper:

Difference Engine: Harnessing Memory Redundancy in Virtual Machines

Diwaker Gupta, University of California, San Diego; Sangmin Lee, University of Texas at Austin; Michael Vrable, Stefan Savage, Alex C. Snoeren, George Varghese, Geoffrey M. Voelker, and Amin Vahdat, University of California, San Diego

Jay Lepreau Best Paper:

DryadLINQ: A System for General-Purpose Distributed Data-Parallel Computing Using a High-Level Language

Yuan Yu, Michael Isard, Dennis Fetterly, and Mihai Budiu, Microsoft Research Silicon Valley; Úlfar Erlingsson, Reykjavík University, Iceland, and Microsoft Research Silicon Valley; Pradeep Kumar Gunda and Jon Currey, Microsoft Research Silicon Valley

Jay Lepreau Best Paper:

KLEE: Unassisted and Automatic Generation of High-Coverage Tests for Complex Systems Programs

Cristian Cadar, Daniel Dunbar, and

LISA ‘08

Best Paper:

ENAVis: Enterprise Network Activities Visualization

Qi Liao, Andrew Blaich, Aaron Striegel, and Douglas Thain, University of Notre Dame

Best Student Paper:

Automatic Software Fault Diagnosis by Exploiting Application Signatures

Xiaoning Ding, The Ohio State University; Hai Huang, Yaoping Ruan, and Anees Shaikh, IBM T.J. Watson Research Center; Xiaodong Zhang, The Ohio State University

USENIX Security ‘08

Best Paper:

Highly Predictive Blacklisting

Jian Zhang and Phillip Porras, SRI International; Johannes Ullrich, SANS Institute

Best Student Paper:

Lest We Remember: Cold Boot Attacks on Encryption Keys

J. Alex Halderman, Princeton University; Seth D. Schoen, Electronic Frontier Foundation; Nadia Heninger and William Clarkson, Princeton University; William Paul, Wind River Systems; Joseph A. Calandrino and Ariel J. Feldman, Princeton University; Jacob Appelbaum; Edward W. Felten, Princeton University

USENIX ‘08

Best Paper:

Decoupling Dynamic Program Analysis from Execution in Virtual Environments

Jim Chow, Tal Garfinkel, and Peter M. Chen, VMware

Best Student Paper:

Vx32: Lightweight User-level Sandboxing on the x86

Bryan Ford and Russ Cox, Massachusetts Institute of Technology

NSDI ‘08

Best Paper:

Remus: High Availability via Asynchronous Virtual Machine Replication

Brendan Cully, Geoffrey Lefebvre, Dutch Meyer, Mike Feeley, and Norm Hutchinson, University of British Columbia; Andrew Warfield, University of British Columbia and Citrix Systems, Inc.

Best Paper:

Consensus Routing: The Internet as a Distributed System

John P. John, Ethan Katz-Bassett, Arvind Krishnamurthy, and Thomas Anderson, University of Washington; Arun Venkataramani, University of Massachusetts Amherst

LEET ‘08

Best Paper:

Designing and Implementing Malicious Hardware (PDF) or read in HTML

Samuel T. King, Joseph Tucek, Anthony Cozzie, Chris Grier, Weihang Jiang, and Yuanyuan Zhou, University of Illinois at Urbana-Champaign

FAST ‘08

Best Paper:

Portably Solving File TOCTTOU Races with Hardness Amplification

Dan Tsafrir, IBM T.J. Watson Research Center; Tomer Hertz, Microsoft Research; David Wagner, University of California, Berkeley; Dilma Da Silva, IBM T.J. Watson Research Center

An Analysis of Data Corruption in the Storage Stack

Lakshmi N. Bairavasundaram, University of Wisconsin, Madison; Garth Goodson, Network Appliance Inc.; Bianca Schroeder, University of Toronto; Andrea C. Arpaci-Dusseau and Remzi H. Arpaci-Dusseau, University of Wisconsin, Madison

2007

LISA ‘07

Best Paper:

Application Buffer-Cache Management for Performance: Running the World’s Largest MRTG

David Plonka, Archit Gupta, and Dale Carder, University of Wisconsin Madison

Best Paper:

PoDIM: A Language for High-Level Configuration Management

Thomas Delaet and Wouter Joosen, Katholieke Universiteit Leuven, Belgium

16th USENIX Security Symposium

Best Paper:

Towards Automatic Discovery of Deviations in Binary Implementations with Applications to Error Detection and Fingerprint Generation

David Brumley, Juan Caballero, Zhenkai Liang, James Newsome, and Dawn Song, Carnegie Mellon University

Best Student Paper:

Keep Your Enemies Close: Distance Bounding Against Smartcard Relay Attacks

Saar Drimer and Steven J. Murdoch, Computer Laboratory, University of Cambridge

USENIX ‘07

Best Paper:

Hyperion: High Volume Stream Archival for Retrospective Querying

Peter Desnoyers and Prashant Shenoy, University of Massachusetts Amherst

Best Paper:

SafeStore: A Durable and Practical Storage System

Ramakrishna Kotla, Lorenzo Alvisi, and Mike Dahlin, The University of Texas at Austin

NSDI ‘07

Best Paper:

Life, Death, and the Critical Transition: Finding Liveness Bugs in Systems Code

Charles Killian, James W. Anderson, Ranjit Jhala, and Amin Vahdat, University of California, San Diego

Best Student Paper:

Do Incentives Build Robustness in BitTorrent?

Michael Piatek, Tomas Isdal, Thomas Anderson, and Arvind Krishnamurthy, University of Washington; Arun Venkataramani, University of Massachusetts Amherst

FAST ‘07

Best Paper:

Disk Failures in the Real World: What Does an MTTF of 1,000,000 Hours Mean to You?

Bianca Schroeder and Garth A. Gibson, Carnegie Mellon University

Best Paper:

TFS: A Transparent File System for Contributory Storage

James Cipar, Mark D. Corner, and Emery D. Berger, University of Massachusetts Amherst

LISA ‘06

Best Paper:

A Platform for RFID Security and Privacy Administration

Melanie R. Rieback, Vrije Universiteit Amsterdam; Georgi N. Gaydadjiev, Delft University of Technology; Bruno Crispo, Rutger F.H. Hofman, and Andrew S. Tanenbaum, Vrije Universiteit Amsterdam

Honorable Mention:

A Forensic Analysis of a Distributed Two-Stage Web-Based Spam Attack

Daniel V. Klein, LoneWolf Systems

OSDI ‘06

Best Paper:

Rethink the Sync

Edmund B. Nightingale, Kaushik Veeraraghavan, Peter M. Chen, and Jason Flinn, University of Michigan

Best Paper:

Bigtable: A Distributed Storage System for Structured Data

Fay Chang, Jeffrey Dean, Sanjay Ghemawat, Wilson C. Hsieh, Deborah A. Wallach, Mike Burrows, Tushar Chandra, Andrew Fikes, and Robert E. Gruber, Google, Inc.

15th USENIX Security Symposium

Best Paper:

Evaluating SFI for a CISC Architecture

Stephen McCamant, Massachusetts Institute of Technology; Greg Morrisett, Harvard University

Best Student Paper:

Keyboards and Covert Channels

Gaurav Shah, Andres Molina, and Matt Blaze, University of Pennsylvania

2006 USENIX Annual Technical Conference

Best Paper:

Optimizing Network Virtualization in Xen

Aravind Menon, EPFL; Alan L. Cox, Rice University; Willy Zwaenepoel, EPFL

Best Paper:

Replay Debugging for Distributed Applications

Dennis Geels, Gautam Altekar, Scott Shenker, and Ion Stoica, University of California, Berkeley

NSDI ‘06

Best Paper:

Experience with an Object Reputation System for Peer-to-Peer Filesharing

Kevin Walsh and Emin Gün Sirer, Cornell University

Availability of Multi-Object Operations

Haifeng Yu, Intel Research Pittsburgh and Carnegie Mellon University; Phillip B. Gibbons, Intel Research Pittsburgh; Suman Nath, Microsoft Research

2005

FAST ‘05

Best Paper:

Ursa Minor: Versatile Cluster-based Storage

Michael Abd-El-Malek, William V. Courtright II, Chuck Cranor, Gregory R. Ganger, James Hendricks, Andrew J. Klosterman, Michael Mesnier, Manish Prasad, Brandon Salmon, Raja R. Sambasivan, Shafeeq Sinnamohideen, John D. Strunk, Eno Thereska, Matthew Wachs, and Jay J. Wylie, Carnegie Mellon University

Best Paper:

On Multidimensional Data and Modern Disks

Steven W. Schlosser, Intel Research Pittsburgh; Jiri Schindler, EMC Corporation; Stratos Papadomanolakis, Minglong Shao, Anastassia Ailamaki, Christos Faloutsos, and Gregory R. Ganger, Carnegie Mellon University

LISA ‘05

Best Paper:

Toward a Cost Model for System Administration

Alva L. Couch, Ning Wu, and Hengky Susanto, Tufts University

Best Student Paper:

Toward an Automated Vulnerability Comparison of Open Source IMAP Servers

Chaos Golubitsky, Carnegie Mellon University

Best Student Paper:

Reducing Downtime Due to System Maintenance and Upgrades

Shaya Potter and Jason Nieh, Columbia University

IMC 2005

Best Student Paper:

Measurement-based Characterization of a Collection of On-line Games

Chris Chambers and Wu-chang Feng, Portland State University; Sambit Sahu and Debanjan Saha, IBM Research

Security ‘05

Best Paper:

Mapping Internet Sensors with Probe Response Attacks

John Bethencourt, Jason Franklin, and Mary Vernon University of Wisconsin, Madison

Best Student Paper:

Security Analysis of a Cryptographically-Enabled RFID Device

Steve Bono, Matthew Green, and Adam Stubblefield, Johns Hopkins University; Ari Juels, RSA Laboratories; Avi Rubin, Johns Hopkins University; Michael Szydlo, RSA Laboratories

MobiSys ‘05

Best Paper:

Reincarnating PCs with Portable SoulPads

Ramón Cáceres, Casey Carter, Chandra Narayanaswami, and Mandayam Raghunath, IBM T.J. Watson Research Center

NSDI ‘05

Best Paper:

Detecting BGP Configuration Faults with Static Analysis

Nick Feamster and Hari Balakrishnan, MIT Computer Science and Artificial Intelligence Laboratory

Best Student Paper:

Botz-4-Sale: Surviving Organized DDoS Attacks That Mimic Flash Crowds

Srikanth Kandula and Dina Katabi, Massachusetts Institute of Technology; Matthias Jacob, Princeton University; Arthur Berger, Massachusetts Institute of Technology/Akamai

2005 USENIX Annual Technical Conference

General Track

Best Paper:

Debugging Operating Systems with Time-Traveling Virtual Machines

Samuel T. King, George W. Dunlap, and Peter M. Chen, University of Michigan

Best Student Paper:

Itanium—A System Implementor’s Tale

Charles Gray, University of New South Wales; Matthew Chapman and Peter Chubb, University of New South Wales and National ICT Australia; David Mosberger-Tang, Hewlett-Packard Labs; Gernot Heiser, University of New South Wales and National ICT Australia

Best Paper:

USB/IP—A Peripheral Bus Extension for Device Sharing over IP Network

Takahiro Hirofuchi, Eiji Kawai, Kazutoshi Fujikawa, and Hideki Sunahara, Nara Institute of Science and Technology

閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/7f32925830ed16d49d82040f.html 分布式系統設計開發過程中有幾個比較有意思的現象:

1. CAP原理。CAP分別表示Consistency(一致性), Availability(可訪問性), Partition-tolerance(網絡分區容忍性)。Consistency指強一致性,符合ACID;Availability指每一個請求都能在確定的時間內返回結果;Partition-tolerance指系統能在網絡被分成多個部分,即允許任意消息丟失的情況下正常工作。CAP原理指出,CAP三者最多取其二,沒有完美的結果。因此,我們設計replication策略、一致性模型、分布式事務時都應該有所折衷。

2. 一致性的不可能性原理。該原理指出在允許失敗的異步系統下,進程間是不可能達成一致的。典型的問題就是分布式選舉問題,實際系統如Bigtable的tablet加載問題。所以,Google Chubby/Hadoop Zookeeper實現時都需要對服務器時鐘誤差做一個假設。當時鐘出現不一致時,工作機只能下線以防止出現不正確的結果。

3. 錯誤必然出現原理。只要是理論上有問題的設計/實現,運行時一定會出現,不管概率有多低。如果沒有出現問題,要么是穩定運行時間不夠長,要么是壓力不夠大。

4. 錯誤的必然復現原則。實踐表明,分布式系統測試中發現的錯誤等到數據規模增大以后必然會復現。分布式系統中出現的多機多線程問題有的非常難于排查,但是,沒關系,根據現象推測原因并補調試日志吧,加大數據規模,錯誤肯定會復現的。

5. 兩倍數據規模原則。實踐表明,分布式系統最大數據規模翻番時,都會發現以前從來沒有出現過的問題。這個原則當然不是準確的,不過可以指導我們做開發計劃。不管我們的系統多么穩定,不要高興太早,數據量翻番一定會出現很多意想不到的情況。不信就試試吧!

閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/d291ab64301ddbfaf73654bc.html Hypertable和Hbase二者同源,設計也有諸多相似之處,最主要的區別當然還是編程語言的選擇。Hbase選擇Java主要是因為Apache和Hadoop的公共庫、歷史項目基本都采用該語言,并且Java項目在設計模式和文檔上一般都比C++項目好,非常適合開源項目。C++的優勢當然還是在性能和內存使用上。Yahoo曾經給出了一個很好的Terasort結果(perspectives.mvdirona.com/2008/07/08/HadoopWinsTeraSort.aspx),它們認為對于大多數Mapreduce任務,比如分布式排序,性能瓶頸在于IO和網絡,Java和C++在性能上基本沒有區別。不過,使用Java的Mapreduce在每臺服務器上明顯使用了更多的CPU和內存,如果用于分布式排序的服務器還需要部署其它的CPU/內存密集型應用,Java的性能劣勢將顯現。對于Hypertable/HBase這樣的表格系統,Java的選擇將帶來如下問題:

1. Hyertable/Hbase是內存和CPU密集型的。Hypertable/Hbase采用Log-Structured Merge Tree設計,系統可以使用的內存直接決定了系統性能。內存中的memtable和表格系統內部的緩存都大量使用內存,可使用的內存減少將導致merge-dump頻率加大,直接加重底層HDFS的壓力。另外,讀取和dump操作大量的歸并操作也可能使CPU成為一個瓶頸,再加上對數據的壓縮/解壓縮,特別是Bigtable中最經常使用的BM-diff算法在壓縮/解壓縮過程完全跑滿一個CPU核,很難想象Java實現的Hbase能夠與C++實現的Hypertable在性能上抗衡。

2. Java垃圾回收。目前Java虛擬機垃圾回收時將停止服務一段時間,這對Hypertable/HBase中大量使用的Lease機制是一個很大的考驗。雖然Java垃圾回收可以改進,但是企圖以通用的方式完全解決內存管理問題是不現實的。內存管理沒有通用做法,需要根據應用的訪問模式采取選擇不同的策略。

當然,Hadoop由于采用了Java設計,導致開源合作變得更加容易,三大核心系統之上開發的輔助系統,如Hadoop的監控,Pig等都相當成功。所以,我的觀點依然是:對于三駕馬車的核心系統,采用C++相對合理;對于輔助模塊,Java是一個不錯的選擇。 閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/ef201038f5d866f8b311c746.html 對于Web應用來說,RDBMS在性能和擴展性上有著天生的缺陷,而key-value存儲系統通過犧牲關系數據庫的事務和范式等要求來換取性能和擴展性,成為了不錯的替代品。key-value存儲系統設計時一般需要關注擴展性,錯誤恢復,可靠性等,大致可以分類如下:

1. “山寨“流派:國產的很多系統屬于這種類型。這種類型的系統一般不容易擴展,錯誤恢復和負載平衡等都需要人工介入。由于國內的人力成本較低,這類系統通過增加運維人員的數量來回避分布式系統設計最為復雜的幾個問題,具有強烈的中國特色。這種系統的好處在于設計簡單,適合幾臺到幾十臺服務器的互聯網應用。比如,現在很多多機mysql應用通過人工分庫來實現系統擴展,即每次系統將要到達服務上限時,增加機器重新分庫。又如,很多系統將更新節點設計成單點,再通過簡單的冗余方式來提高系統可靠性;又如,很多系統規定單個表格最大的數據量,并通過人工指定機器服務每個表格來實現負載平衡。在這樣的設計下,應用規模增加一倍,服務器和運營各項成本增加遠大于一倍,不能用來提供云計算服務。然而由于其簡單可依賴,這類系統非常適合小型互聯網公司或者大型互聯網公司的一些規模較小的產品。

2. "P2P"流派:代表作為Amazon的Dynamo。Amazon作為提供云計算服務最為成功的公司,其商業模式和技術實力都異常強大。Amazon的系統典型特點是采用P2P技術,組合使用了多種流行的技術,如DHT,Vector Clock,Merkle Tree等,并且允許配置W和R值,在可靠性和一致性上求得一個平衡。Dynamo的負載平衡需要通過簡單的人工配置機器來配合,它的很多技術點可以單獨被其它系統借鑒。如,國內的“山寨”系統可以借鑒Dynamo的設計提高擴展性。

3. Google流派:代表作有Google的三駕馬車:GFS+Mapreduce+Bigtable。這種系統屬于貴族流派,模仿者眾多,知名的有以Yahoo為代表的Hadoop, 與Hadoop同源的Hypertable以及國內外眾多互聯網公司。Google的設計從數據中心建設,服務器選購到系統設計,數據存儲方式(數據壓縮)到系統部署都有一套指導原則,自成體系。如Hadoop的HDFS設計時不支持多個客戶端同時并發Append操作,導致后續的HBase及Hypertable實現極其困難。模仿者雖多,成功者少,HBase和Hypertable都在響應的延時及宕機恢復上有一系列的問題,期待后續發布的版本能有較大的突破。小型互聯網公司可以使用Hadoop的HDFS和Mapreduce,至于類似Hbase/Hypertable的表格系統,推薦自己做一個“山寨”版后不斷優化。

4. 學院派:這種類型的系統為研究人員主導,設計一般比較復雜,實現的時候以Demo為主。這類系統代表未來可能的方向,但實現的Demo可能有各種各樣的問題,如有的系統不能長期穩定運行,又如,有的系統不支持異構的機器環境。本人對這類系統知之甚少,著名的類Mapreduce系統Microsoft Dryad看起來有這種味道。

【注:"山寨“如山寨手機,山寨開心網主要表示符合中國國情,非貶義】 閱讀全文

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/ae38ebf8891acb05d9f9fdb9.html 從Google App Engine中挖出的關于Megastore/Bigtable跨數據中心replication的文章,里面有提到一點點實現,希望對理解Bigtable及其衍生品的replication機制有用。我想指出幾點:

1. Bigtable的跨機房replication是保證最終一致性的,Megastore是通過Paxos 將tablet變成可以被跨機房的tablet server服務的。Bigtable的問題在于機器斷電會丟數據,Megastore可以做到不丟數據,但是實現起來極其復雜。Megastore的機制對性能還有一定影響,因為Google Chubby不適合訪問量過大的環境,所以,Bigtable和Megastore這兩個team正在合作尋找一個平衡點。

2. Bigtable內部的replication是后臺進行的,按照列級別執行復制;Megastore是按照Entity group級別進行Paxos控制。為什么Bigtable按照列級別復制?難道和locality group有關?

Migration to a Better Datastore

At Google, we've learned through experience to treat everything with healthy skepticism. We expect that servers, racks, shared GFS cells, and even entire datacenters will occasionally go down, sometimes with little or no warning. This has led us to try as hard as possible to design our products to run on multiple servers, multiple cells, and even multiple datacenters simultaneously, so that they keep running even if any one (or more) redundant underlying parts go down. We call this multihoming. It's a term that usually applies narrowly, to networking alone, but we use it much more broadly in our internal language.

Multihoming is straightforward for read-only products like web search, but it's more difficult for products that allow users to read and write data in real time, like GMail, Google Calendar, and App Engine. I've personally spent a while thinking about how multihoming applies to the App Engine datastore. I even gave a talk about it at this year's Google I/O.

While I've got you captive, I'll describe how multihoming currently works in App Engine, and how we're going to improve it with a release next week. I'll wrap things up with more detail about App Engine's maintenance schedule.

Bigtable replication and planned datacenter moves

When we launched App Engine, the datastore served each application's data out of one datacenter at a time. Data was replicated to other datacenters in the background, using

For example, if the datastore was serving data for some apps from datacenter A, and we needed to switch to serving their data from datacenter B, we simply flipped the datastore to read only mode, waited for Bigtable replication to flush any remaining writes from A to B, then flipped the switch back and started serving in read/write mode from B. This generally works well, but it depends on the Bigtable cells in both A and B to be healthy. Of course, we wouldn't want to move to B if it was unhealthy, but we definitely would if B was healthy but A wasn't. Google continuously monitors the overall health of App Engine's underlying services, like GFS and Bigtable, in all of our datacenters. However, unexpected problems can crop up from time to time. When that happens, having backup options available is crucial. You may remember the unplanned outage we had a few months ago. We published a detailed postmortem; in a nutshell, the shared GFS cell we use went down hard, which took us down as well, and it took a while to get the GFS cell back up. The GFS cell is just one example of the extent to which we use shared infrastructure at Google. It's one of our greatest strengths, in my opinion, but it has its drawbacks. One of the most noticeable drawback is loss of isolation. When a piece of shared infrastructure has problems or goes down, it affects everything that uses it. In the example above, if the Bigtable cell in A is unhealthy, we're in trouble. Bigtable replication is fast, but it runs in the background, so it's usually at least a little behind, which is why we wait for that final flush before switching to B. If A is unhealthy, some of its data may be unavailable for extended periods of time. We can't get to it, so we can't flush it, we can't switch to B, and we're stuck in A until its Bigtable cell recovers enough to let us finish the flush. In extreme cases like this, we might not know how soon the data in A will become available. Rather than waiting indefinitely for A to recover, we'd like to have the option to cut our losses and serve out of B instead of A, even if it means a small, bounded amount of disruption to application data. Following our example, that extreme recovery scenario would go something like this: Naturally, when A comes back online, we can recover that unreplicated data, but if we've already started serving from B, we can't automatically copy it over from A, since there may have been conflicting writes in B to the same entities. If your app had unreplicated writes, we can at least provide you with a full dump of those writes from A, so that your data isn't lost forever. We can also provide you with tools to relatively easily apply those unreplicated writes to your current datastore serving out of B. Unfortunately, Bigtable replication on its own isn't quite enough for us to implement the extreme recovery scenario above. We use Bigtable single-row transactions, which let us do read/modify/write operations on multiple columns in a row, to make our datastore writes transactional and consistent. Unfortunately, Bigtable replication operates at the column value level, not the row level. This means that after a Bigtable transaction in A that updates two columns, one of the new column values could be replicated to B but not the other. If this happened, and we switched to B without flushing the other column value, the datastore would be internally inconsistent and difficult to recover to a consistent state without the data in A. In our July 2nd outage, it was partly this expectation of internal inconsistency that prevented us from switching to datacenter B when A became unhealthy. Thankfully, there's a solution to our consistency problem: Megastore replication. Megastore is an internal library on top of Bigtable that supports declarative schemas, multi-row transactions, secondary indices, and recently, consistent replication across datacenters. The App Engine datastore uses Megastore liberally. We don't need all of its features - declarative schemas, for example - but we've been following the consistent replication feature closely during its development. Megastore replication is similar to Bigtable replication in that it replicates data across multiple datacenters, but it replicates at the level of entire entity group transactions, not individual Bigtable column values. Furthermore, transactions on a given entity group are always replicated in order. This means that if Bigtable in datacenter A becomes unhealthy, and we must take the extreme option to switch to B before all of the data in A has flushed, B will be consistent and usable. Some writes may be stuck in A and unavailable in B, but B will always be a consistent recent snapshot of the data in A. Some scattered entity groups may be stale, ie they may not reflect the most recent updates, but we'd at least be able to start serving from B immediately, as opposed waiting for A to recover. Megastore replication was originally intended to replicate across multiple datacenters synchronously and atomically, using Paxos. Unfortunately, as I described in my Google I/O talk, the latency of Paxos across datacenters is simply too high for a low-level, developer facing storage system like the App Engine datastore. Due to that, we've been working with the Megastore team on an alternative: asynchronous, background replication similar to Bigtable's. This system maintains the write latency our developers expect, since it doesn't replicate synchronously (with Paxos or otherwise), but it's still consistent and fast enough that we can switch datacenters at a moment's notice with a minimum of unreplicated data. We've had a fully functional version of asynchronous Megastore replication for a while. We've been testing it heavily, working out the kinks, and stressing it to make sure it's robust as possible. We've also been using it in our internal version of App Engine for a couple months. I'm excited to announce that we'll be migrating the public App Engine datastore to use it in a couple weeks, on September 22nd. This migration does require some datastore downtime. First, we'll switch the datastore to read only mode for a short period, probably around 20-30 minutes, while we do our normal data replication flush, and roll forward any transactions that have been committed but not fully applied. Then, since Megastore replication uses a new transaction log format, we need to take the entire datastore down while we drop and recreate our transaction log columns in Bigtable. We expect this to only take a few minutes. After that, we'll be back up and running on Megastore replication! As described, Megastore replication will make App Engine much more resilient to hiccoughs and outages in individual datacenters and significantly reduce the likelihood of extended outages. It also opens the door to two new options which will give developers more control over how their data is read and written. First, we're exploring allowing reads from the non-primary datastore if the primary datastore is taking too long to respond, which could decrease the likelihood of timeouts on read operations. Second, we're exploring full Paxos for write operations on an opt-in basis, guaranteeing data is always synchronously replicated across datacenters, which would increase availability at the cost of additional write latency. Both of these features are speculative right now, but we're looking forward to allowing developers to make the decisions that fit their applications best! Finally, a word about our maintenance schedule. App Engine's scheduled maintenance periods usually correspond to shifts in primary application serving between datacenters. Our maintenance periods usually last for about an hour, during which application serving is continuous, but access to the Datastore and memcache may be read-only or completely unavailable. We've recently developed better visibility into when we expect to shift datacenters. This information isn't perfect, but we've heard from many developers that they'd like more advance notice from App Engine about when these maintenance periods will occur. Therefore, we're happy to announce below the preliminary maintenance schedule for the rest of 2009. We don't expect this information to change, but if it does, we'll notify you (via the App Engine Downtime Notify Google Group) as soon as possible. The App Engine team members are personally dedicated to keeping your applications serving without interruption, and we realize that weekday maintenance periods aren't ideal for many. However, we've selected the day of the week and time of day for maintenance to balance disruption to App Engine developers with availability of the full engineering teams of the services App Engine relies upon, like GFS and Bigtable. In the coming months, we expect features like Megastore replication to help reduce the length of our maintenance periods. 閱讀全文

Planning for trouble

We give up on flushing the most recent writes in A that haven't replicated to B, and switch to serving the data that is in B. Thankfully, there isn't much data in A that hasn't replicated to B, because replication is usually quite fast. It depends on the nature of the failure, but the window of unreplicated data usually only includes a small fraction of apps, and is often as small as a few thousand recent puts, deletes, and transaction commits, across all affected apps.

Megastore replication saves the day!

To Paxos or not to Paxos

Onward and upward

Planning for scheduled maintenance

類別:默認分類 查看評論

文章來源:http://hi.baidu.com/knuthocean/blog/item/12bb9f3dea0e400abba1673c.html